
Deep Learning vs. Machine Learning: What’s the Difference?

July 18, 2025


Deep learning vs machine learning—what’s the real difference and why does it matter?

If you’re asking this question, you have probably come across these two closely related concepts and are unsure what separates them. You may also be curious about what the difference means for developing artificial intelligence (AI) solutions that address real-world challenges.

Many people use “deep learning” and “machine learning” interchangeably because both are subfields of artificial intelligence, and deep learning is itself a subset of machine learning. However, they are not the same.

Their differences lie in their architecture, capabilities, requirements, and use cases. Whether you’re a developer, data scientist, business leader, or tech enthusiast, understanding the difference between these two concepts is essential.

In this article, we answer the common question, ‘What is deep learning vs. machine learning?’ We also give you real-life examples of when to use each, especially when building real-world solutions.

Let’s get into it.

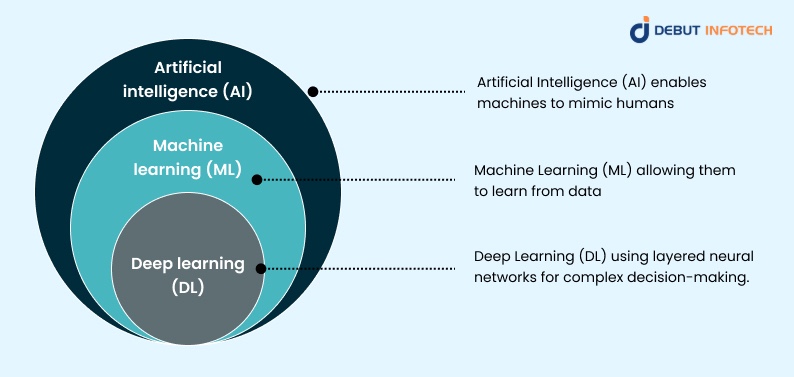

Understanding the Hierarchy: AI, Machine Learning, and Deep Learning

Understanding the key differences between these concepts starts with their definitions, so let’s begin there.

- Artificial intelligence (AI): This is an umbrella term used for any technique that enables machines to mimic human behavior.

- Machine learning (ML): Machine learning is a subset of artificial intelligence that enables computers to learn from data and make decisions without being explicitly programmed.

- Deep learning (DL): This is a specialized branch of machine learning. It uses neural networks with multiple layers to learn increasingly abstract representations of data. The design is loosely inspired by the way the human brain processes information.

Classical machine learning typically relies on structured data and on algorithms such as decision trees, support vector machines, and linear regression.

Deep learning, on the other hand, excels when massive amounts of data and computational power are available. It requires less manual feature engineering because its multi-layered neural networks automatically extract features from raw data.

Both share the same goals: automation, prediction, and classification. However, the methods they use to achieve those goals and their capabilities differ significantly. Machine learning provides robust solutions when data volumes and computational budgets are moderate. For massive datasets that demand significant resources, deep learning is the tool of choice.

Another major difference lies in how many layers of processing sit between the raw data and the prediction. A classical machine learning model typically has a single stage of learning that operates on hand-crafted features.

In deep learning, each subsequent layer builds on the preceding one, gradually abstracting complex patterns and enabling the system to understand more nuanced representations of the data. This depth makes deep learning more suitable for natural language processing and computer vision.
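To make the contrast concrete, here is a minimal sketch in Python using only the standard library. The weights are random and untrained, and the feature values and layer sizes are hypothetical. The sketch only shows the shape of the computation:

- The shallow model applies one layer to two hand-crafted features.
- The deep model passes raw input through a stack of layers, each transforming the output of the one before it.

```python
import random

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def relu(values):
    """Element-wise ReLU non-linearity."""
    return [max(0.0, v) for v in values]

def random_layer(n_in, n_out, rng):
    """Random, untrained weights and zero biases (for illustration only)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)

# Shallow model: a single layer over two hypothetical hand-crafted features.
handcrafted = [0.8, 0.1]
shallow_w, shallow_b = random_layer(2, 1, rng)
shallow_out = dense(handcrafted, shallow_w, shallow_b)

# Deep model: raw input flows through stacked layers (sizes are arbitrary).
raw_input = [0.2, 0.5, 0.1, 0.9, 0.4, 0.3]
layer_sizes = [6, 8, 4, 2]
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

activation = raw_input
representations = [activation]
for i, (w, b) in enumerate(layers):
    activation = dense(activation, w, b)  # builds on the previous layer's output
    if i < len(layers) - 1:               # non-linearity on hidden layers only
        activation = relu(activation)
    representations.append(activation)

for depth, rep in enumerate(representations):
    print(f"layer {depth}: {len(rep)} values")
```

In a trained network, these intermediate representations are what become progressively more abstract.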

How They Work: Algorithms and Architectures of Deep Learning vs. Machine Learning

The key difference between the architectures of machine learning and deep learning lies in the level of human involvement.

Machine learning typically requires human engineers to identify relevant features. In supervised learning, it also requires them to train models on labeled datasets.

Typically, these features are fed into algorithms such as logistic regression, decision trees, or k-nearest neighbors, which learn patterns and make predictions. This process involves a cycle of model tuning and evaluation that depends on human judgment for optimization.
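Here is a minimal sketch of this workflow, assuming a toy spam-detection task. A human decides which features matter. The choices here are hypothetical: the number of exclamation marks and the share of uppercase letters. A k-nearest neighbors classifier then learns from the resulting labeled feature vectors. The messages and labels are invented for illustration.

```python
import math
from collections import Counter

def extract_features(message):
    """Hand-crafted features chosen by a human (hypothetical choices)."""
    n_chars = max(len(message), 1)
    exclamations = message.count("!")
    upper_ratio = sum(c.isupper() for c in message) / n_chars
    return (exclamations, upper_ratio)

def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    distances = sorted((math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

messages = [
    ("WIN A FREE PRIZE NOW!!!", "spam"),
    ("CLAIM YOUR CASH!!", "spam"),
    ("URGENT!!! ACT FAST", "spam"),
    ("See you at lunch tomorrow", "ham"),
    ("Can you review my draft?", "ham"),
    ("Meeting moved to 3pm", "ham"),
]
train_X = [extract_features(m) for m, _ in messages]
train_y = [label for _, label in messages]

print(knn_predict(train_X, train_y, extract_features("FREE GIFT!!! CLICK NOW")))  # spam
print(knn_predict(train_X, train_y, extract_features("Lunch at noon?")))          # ham
```

The features are not scaled, so the exclamation count dominates the distance. Deciding whether to rescale them is exactly the kind of human judgment described above.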

Most machine learning models learn in a static (batch) manner: once training is over, they stop learning until they are retrained on new data. This static nature can be both a blessing and a curse.

Here’s what that means: the advantage of static learning is predictable outputs, while the limitation is poor adaptability when the underlying data changes.

Deep learning takes a different approach from classical machine learning. At its core are artificial neural networks (ANNs), which are inspired by the structure of the human brain. An ANN is made up of an input layer, multiple hidden layers, and an output layer.

Weighted connections link the neurons in successive layers, forming a deep web of interactions. The network learns through backpropagation and gradient descent, adjusting the connection weights to reduce its prediction errors.
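The following sketch shows backpropagation and gradient descent on a tiny network. It has one hidden layer of three sigmoid neurons and is trained on the XOR problem with mean squared error. The network size, learning rate, and epoch count are arbitrary choices for illustration. Real frameworks compute these gradients automatically. The sketch only shows the mechanics: the error signal is pushed backward, and each weight is nudged against its gradient.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

rng = random.Random(42)
n_in, n_hidden = 2, 3
W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]  # input -> hidden
b1 = [0.0] * n_hidden
W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]                         # hidden -> output
b2 = 0.0

data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]  # XOR
learning_rate = 0.5

def forward(x):
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

def mean_squared_error():
    return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

initial_loss = mean_squared_error()
for _ in range(5000):
    # Accumulate gradients over the whole dataset (full-batch gradient descent).
    gW1 = [[0.0] * n_in for _ in range(n_hidden)]
    gb1 = [0.0] * n_hidden
    gW2 = [0.0] * n_hidden
    gb2 = 0.0
    for x, y in data:
        hidden, out = forward(x)
        # Error signal at the output: dLoss/dz for the output neuron.
        delta_out = 2 * (out - y) * out * (1 - out) / len(data)
        gb2 += delta_out
        for j in range(n_hidden):
            gW2[j] += delta_out * hidden[j]
            # Propagate the error backward through weight W2[j] to hidden neuron j.
            delta_h = delta_out * W2[j] * hidden[j] * (1 - hidden[j])
            gb1[j] += delta_h
            for i in range(n_in):
                gW1[j][i] += delta_h * x[i]
    # Gradient descent: move every weight a small step against its gradient.
    for j in range(n_hidden):
        W2[j] -= learning_rate * gW2[j]
        b1[j] -= learning_rate * gb1[j]
        for i in range(n_in):
            W1[j][i] -= learning_rate * gW1[j][i]
    b2 -= learning_rate * gb2

final_loss = mean_squared_error()
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Depending on the random initialization, a network this small may not solve XOR perfectly. Even so, the training loss falls as the weights are updated.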

Architectures in deep learning include:

- Convolutional Neural Networks (CNNs) for processing images.
- Recurrent Neural Networks (RNNs) for sequence data such as text and time series.
- Generative Adversarial Networks (GANs) for generating content.

These architectures enable deep learning to handle tasks that were previously impractical for machines.
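The building block that gives CNNs their name is the convolution: a small kernel slides across the image and computes a weighted sum at each position. Here is a minimal pure-Python sketch. The kernel is hand-set to respond to vertical edges purely for illustration. In a real CNN, kernel weights are learned during training, and libraries implement this operation far more efficiently.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly cross-correlation, as in most deep learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 4x4 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-set vertical-edge kernel; a CNN would learn weights like these.
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)  # [0, 18, 0]
```

The output is 18 only where the window straddles the dark-to-bright boundary, and 0 elsewhere. This is how an early CNN layer can flag edges for later layers to combine.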

In recent years, transformer-based architectures, such as those behind BERT and GPT, have revolutionized how AI models handle NLP tasks. Transformers process all positions in a sequence in parallel and model long-range dependencies more effectively. This is a significant improvement over traditional RNNs.
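At the heart of the transformer is scaled dot-product attention, in which every position in a sequence computes a weighted average over all positions at once. The sketch below is simplified. It uses toy embeddings directly as queries, keys, and values. Real transformers first apply learned projection matrices and use multiple attention heads.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query attends to every key."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:  # each query is independent, so these could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
    return outputs, all_weights

# Toy embeddings for a three-token sequence (hypothetical numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each query's computation is independent of the others, so all positions can be processed in parallel. Any token can also attend directly to any other, however far apart they are.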

Deep learning models not only recognize patterns but can also generate new content. For example, AI artists use Generative Adversarial Networks (GANs) to generate digital artwork, realistic avatars, and even synthesized voices. This versatility makes deep learning applicable to a wider range of tasks than classical machine learning, particularly tasks involving unstructured data.


Machine Learning vs. Deep Learning: Data Requirements and Preprocessing

Classical machine learning does not work well with massive volumes of unstructured data.

It is better suited to small and medium-sized structured datasets, provided the data is clean and properly labelled. As such, preprocessing steps such as normalization, encoding, and feature selection are essential before training.

These limitations make feature engineering, the manual process of selecting and transforming variables, a cornerstone of any successful machine learning application.

With ML, preprocessing often involves a series of structured steps, including:

- Handling missing data

- Scaling numerical values

- Converting categorical variables into numerical form using techniques like one-hot encoding

- Reducing dimensionality to prevent overfitting

Because of this manual involvement, the performance of ML models relies heavily on the quality of the preprocessing pipeline.
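Several of the preprocessing steps listed above fit in a few lines of Python. This minimal example uses hypothetical customer records and covers imputation, min-max scaling, and one-hot encoding. Dimensionality reduction is omitted for brevity. In a real pipeline, compute statistics such as the mean, minimum, and maximum on the training set only, then reuse them on validation and test data.

```python
def impute_mean(column):
    """Replace missing values (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in column]

def min_max_scale(column):
    """Rescale numbers to the range [0, 1]."""
    lo, hi = min(column), max(column)
    span = (hi - lo) or 1.0  # avoid division by zero for constant columns
    return [(v - lo) / span for v in column]

def one_hot(column):
    """Map each category to a binary indicator vector (categories in sorted order)."""
    categories = sorted(set(column))
    return [[1 if v == c else 0 for c in categories] for v in column], categories

# Hypothetical records: age (one value missing) and subscription plan.
ages = [25, None, 35, 45]
plans = ["basic", "premium", "basic", "standard"]

ages_clean = min_max_scale(impute_mean(ages))
plan_vectors, plan_names = one_hot(plans)
rows = [[age] + vec for age, vec in zip(ages_clean, plan_vectors)]
print(plan_names)  # ['basic', 'premium', 'standard']
for row in rows:
    print(row)
```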

On the other hand, deep learning models are data hungry.

The greater the volume and variety of training data, the more effective they become. They perform very well on unstructured data such as images, video, and audio. For these data types, manual feature engineering is difficult and labor-intensive.

Instead of relying on manually selected features, DL models automatically extract relevant features from raw data during training. This reduces the need for human intervention, but deep learning preprocessing is still a complex process.

It often includes data augmentation (especially in computer vision), normalization, tokenization (in NLP), and noise injection.
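As an illustration, here is a minimal sketch of two of these techniques applied to a tiny grayscale image stored as nested lists:

- A random horizontal flip, a common image augmentation.
- Gaussian noise injection.

The flip probability and noise level are arbitrary assumptions. Image libraries provide many more transformations.

```python
import random

def horizontal_flip(image):
    """Mirror each row of a 2D image (a list of pixel rows)."""
    return [list(reversed(row)) for row in image]

def add_gaussian_noise(image, std, rng):
    """Noise injection: add zero-mean Gaussian noise per pixel, clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, std))) for p in row] for row in image]

def augment(image, rng, noise_std=0.05):
    """Produce one randomly augmented copy of an image."""
    out = horizontal_flip(image) if rng.random() < 0.5 else [row[:] for row in image]
    return add_gaussian_noise(out, noise_std, rng)

rng = random.Random(7)
image = [[0.0, 0.2, 0.4],
         [0.6, 0.8, 1.0]]
# One original image becomes several slightly different training examples.
augmented_batch = [augment(image, rng) for _ in range(4)]
for example in augmented_batch:
    print([[round(p, 2) for p in row] for row in example])
```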

The aim is not just to prepare the data but to diversify it in a way that makes the model more robust and generalizable. While deep learning offers these benefits, it also comes with its own limitations.

Some common requirements and limitations of deep learning include:

- Deep learning systems require thousands to millions of data points for training.

- They also require data augmentation techniques to enhance dataset diversity.

- They need powerful hardware (typically GPUs) to process data rapidly.

- With the increasing volume of datasets, data quality and ethical sourcing become a growing concern, especially in sensitive applications such as facial recognition or sentiment analysis.


Use Cases and Applications of Deep Learning vs. Machine Learning

Machine learning excels in industries where there is a large amount of structured data and explainability is crucial.

In finance, for example, machine learning assists with credit scoring, fraud detection, and trading, using algorithms that predict market trends. In healthcare, it is used to predict patient risk, recommend treatments, and support disease diagnosis.

Machine learning is also used in retail and e-commerce for customer segmentation, inventory management, demand forecasting, and recommendation engines. Streaming services use it to personalize suggestions based on user behavior.

For example, Netflix and Amazon use collaborative filtering and clustering algorithms to suggest content or products based on user behavior. Furthermore, ML is used in agriculture to predict yield, monitor crops, and optimize resources using structured data from sensors and weather stations.
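Production recommendation systems at companies like Netflix and Amazon are far more sophisticated. Still, the core idea of item-based collaborative filtering fits in a short sketch. Items rated similarly by the same users are treated as similar. A user is then recommended unseen items that resemble what they already rated highly. The users, titles, and ratings below are hypothetical.

```python
import math

# Hypothetical user -> {item: rating} data; unrated items are simply absent.
ratings = {
    "ana":   {"Drama A": 5, "Drama B": 4, "Comedy A": 1},
    "ben":   {"Drama A": 4, "Drama B": 5, "Comedy B": 2},
    "chloe": {"Comedy A": 5, "Comedy B": 4, "Drama A": 1},
    "dev":   {"Drama A": 5},
}

def item_vector(item):
    """All ratings an item received, keyed by user."""
    return {user: r[item] for user, r in ratings.items() if item in r}

def cosine_similarity(a, b):
    """Cosine similarity between two sparse rating vectors keyed by user."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[u] * b[u] for u in common)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(user, top_n=1):
    """Score unseen items by their similarity to items the user already rated."""
    seen = ratings[user]
    all_items = {item for r in ratings.values() for item in r}
    scores = {}
    for candidate in all_items - set(seen):
        cand_vec = item_vector(candidate)
        scores[candidate] = sum(
            cosine_similarity(cand_vec, item_vector(item)) * rating
            for item, rating in seen.items()
        )
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("dev"))  # ['Drama B']
```

Here, dev has rated only Drama A, so the recommendation is Drama B: the other users who rated Drama A highly also rated Drama B highly.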

On the other hand, deep learning excels with unstructured data and complex tasks that require pattern recognition. It powers self-driving cars, which use CNNs to process camera images for lane detection and obstacle avoidance. In medical imaging, it is used to identify cancer cells and detect fractures, in some studies with accuracy comparable to that of trained radiologists. Deep learning also supports robotic surgery, thanks to its ability to process visual inputs in real time.

Moreover, deep learning powers NLP applications such as Google Translate, GPT-powered chatbots, and voice assistants like Siri and Alexa. In cybersecurity, it analyzes logs and traffic patterns to detect sophisticated threats that traditional rule-based systems miss. Its ability to model sequences and anomalies also makes it a valuable tool for detecting fraudulent activity in financial institutions.

Furthermore, deep learning enables AI agents to learn advanced strategies in complex games like Go and StarCraft. These AI agents often outperform human players. In the entertainment industry, creatives utilize Generative Adversarial Networks (GANs) to generate photorealistic graphics and animations.

They also use deep learning to generate content, compose music, and create visual effects. Beyond that, deep learning is used in climate modelling, autonomous drones, and smart manufacturing. In manufacturing, it detects anomalies, enables predictive maintenance, and optimizes production processes.

Training and Learning Processes

To train machine learning models, engineers split the data into training, validation, and test sets. The model learns its parameters from the training data. Its hyperparameters are tuned on the validation data, and its final performance is evaluated on the test data. Training is usually fast, especially for simple models. Iterative improvements are often made using techniques such as cross-validation and feature engineering.
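Here is a minimal sketch of the two data-splitting ideas mentioned above, using only the Python standard library:

- A shuffled train/validation/test split. The 70/15/15 proportions are a common but arbitrary choice.
- The index bookkeeping behind k-fold cross-validation. Each fold is held out once while the model trains on the rest.

```python
import random

def train_val_test_split(data, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then carve off test and validation sets; the rest is training data."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

def k_fold_indices(n, k):
    """Yield (train_indices, held_out_indices) for k-fold cross-validation."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        held_out = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, held_out
        start += size

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15

for fold, (tr, held) in enumerate(k_fold_indices(10, 3)):
    print(f"fold {fold}: train on {len(tr)}, evaluate on {held}")
```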

Developers tune machine learning hyperparameters using techniques such as grid search or Bayesian optimization. The computational cost is usually manageable: standard laptops or cloud-based CPU machines can often handle the task.
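Grid search is an exhaustive loop over every combination of candidate hyperparameter values, keeping whichever scores best on the validation set. The sketch below tunes two hyperparameters of a k-nearest neighbors classifier, k and the distance metric, on a hypothetical two-class dataset. Bayesian optimization is not shown; it would choose the next combination to try based on earlier results instead of trying them all.

```python
import itertools
import math
from collections import Counter

def manhattan(a, b):
    return sum(abs(p - q) for p, q in zip(a, b))

def knn_predict(train, x, k, metric):
    """Majority vote among the k nearest training points under the chosen metric."""
    dist = math.dist if metric == "euclidean" else manhattan
    nearest = sorted(train, key=lambda item: dist(item[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train, val, **params):
    correct = sum(knn_predict(train, x, **params) == y for x, y in val)
    return correct / len(val)

def grid_search(train, val, param_grid):
    """Try every combination in param_grid; keep the best validation accuracy."""
    names = list(param_grid)
    best_params, best_score = None, -1.0
    for combo in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = accuracy(train, val, **params)
        if score > best_score:  # ties keep the earlier combination
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical data: class "a" has only two training points.
train = [((1, 1), "a"), ((1, 2), "a"),
         ((6, 6), "b"), ((6, 7), "b"), ((7, 6), "b"), ((7, 7), "b")]
val = [((1.5, 1.5), "a"), ((6.5, 6.5), "b"), ((2, 2), "a")]
param_grid = {"k": [1, 3, 5], "metric": ["euclidean", "manhattan"]}

best, score = grid_search(train, val, param_grid)
print(best, score)  # {'k': 1, 'metric': 'euclidean'} 1.0
```

With only two training points in class "a", k = 5 lets the majority class outvote them, so the search settles on a smaller k.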

Deep learning models, in contrast, require a more complex training cycle. They are trained over multiple epochs, where each epoch is one full pass over the training dataset, usually in small mini-batches. The process uses backpropagation, which computes the gradients of a loss function and propagates them backward through the network. Gradient descent then uses those gradients to update the weights and minimize the loss.
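To isolate the mechanics of epochs and mini-batches, the sketch below fits a simple linear model rather than a deep network, with gradients written out by hand. The data comes from the hypothetical relationship y = 2x + 1. The learning rate, batch size, and epoch count are illustrative choices. In a deep network, the same loop applies, but the gradients are obtained by backpropagation through every layer.

```python
import random

rng = random.Random(0)
# Synthetic, noise-free data from the hypothetical relationship y = 2x + 1.
data = [(i / 50, 2 * (i / 50) + 1) for i in range(50)]

w, b = 0.0, 0.0
learning_rate, batch_size, epochs = 0.1, 10, 200

def mse(w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

history = [mse(w, b)]
for epoch in range(epochs):
    rng.shuffle(data)  # new sample order every epoch
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        # Gradients of the mini-batch MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
        grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    history.append(mse(w, b))  # loss after each full pass (epoch)

print(f"w = {w:.3f}, b = {b:.3f}, final loss = {history[-1]:.2e}")
```

Each pass of the outer loop is one epoch. Shuffling between epochs keeps the mini-batches from always containing the same examples.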

Because DL models are prone to overfitting, techniques such as dropout and batch normalization are essential. Dropout randomly deactivates neurons during training. Batch normalization standardizes each layer’s inputs across a mini-batch, which stabilizes training and has a mild regularizing effect. Additionally, developers often use transfer learning, starting from a model pretrained on a large dataset, to reduce training time and computational load.
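Both techniques are simple to express in code. The sketch below shows two pieces:

- Inverted dropout, the common formulation that rescales surviving activations during training so nothing needs to change at inference time.
- Batch normalization for a single feature across a mini-batch.

It is a simplified illustration. In practice, gamma and beta are learned, and batch normalization keeps running averages of the mean and variance for use at inference.

```python
import math
import random

def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so expected activations match inference time."""
    if not training or p == 0.0:
        return list(activations)
    return [0.0 if rng.random() < p else a / (1.0 - p) for a in activations]

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch to zero mean and unit variance,
    then apply a scale (gamma) and shift (beta)."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

rng = random.Random(1)
hidden = [0.5, 1.2, 0.3, 0.9, 2.0, 0.7]
print(dropout(hidden, p=0.5, rng=rng))                  # each unit is either zeroed or doubled
print(dropout(hidden, p=0.5, rng=rng, training=False))  # unchanged at inference

normalized = batch_norm([10.0, 12.0, 14.0, 16.0])
print([round(x, 3) for x in normalized])
```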

Moreover, DL training often requires high-performance computing environments, including multi-GPU setups or TPUs, particularly for large datasets and complex models such as transformers. Depending on data size and model complexity, training can take anywhere from a few hours to several weeks.


Recent advances in distributed training, mixed-precision training, and memory optimization are helping to reduce deep learning training time and cost with little or no loss of accuracy. This makes enterprise-scale deployment more feasible across a range of industries.

Advantages and Limitations

Machine learning is simple, efficient, and interpretable, especially when relationships in the data are linear or mildly non-linear. It is also practical when transparency is a priority, because many machine learning models are easy to audit, explain, and maintain.

Additionally, machine learning requires less computational power and memory, making it the ideal fit for environments with limited resources.

However, machine learning struggles with handling unstructured data, such as images, audio, and video. Additionally, manual feature engineering is time-consuming and demands domain knowledge. Finally, the dependence on structured data means that machine learning does not adapt well to unprocessed, real-world data.

Deep learning overcomes many of these limitations. It can handle large amounts of high-dimensional, unstructured data with little or no manual feature engineering. Deep learning models are well suited to computer vision, speech recognition, and natural language processing. Finally, they support end-to-end learning: they map raw inputs directly to outputs, eliminating the need for handcrafted intermediate processing stages.

While deep learning extends what machine learning can do, it also comes with its own unique challenges. For one, deep learning models are often difficult to interpret and susceptible to biases inherent in the training data. They also require significant computational resources, high-quality labelled datasets, and longer training times. Furthermore, small modifications to the input, including deliberately crafted adversarial examples, can cause deep learning models to produce unreliable results. This is a cause for concern, especially in fields such as autonomous driving and healthcare, where precision is crucial. Finally, energy consumption is a major concern, especially in light of the recent push for cleaner energy.

When to Use Which: Choosing Between ML and DL

Ultimately, your choice depends on the problem at hand, the data you have, and the resources available. If you’re working with structured data and require transparency, machine learning is usually the better choice. It delivers fast, explainable insights without being resource-intensive.

However, if your data is unstructured and massive (for example, video streams, sensor feeds, or text logs), deep learning is likely the right option. You should also choose deep learning if your task involves image recognition, speech synthesis, or translation, where it generally outperforms classical machine learning.

Another thing to consider is the development lifecycle. Machine learning models are much quicker to train and iterate.

Deep learning models, on the other hand, take longer to train, require extensive tuning, and need resource-intensive hardware. These computational requirements translate into higher costs. Start-ups with limited budgets are therefore often better off starting with machine learning.

These days, many developers adopt a hybrid approach. For example, a deep learning model can extract features from raw data, which are then fed into a classical machine learning model for classification or prediction.


Conclusion

The primary differences between machine learning and deep learning lie in their architecture, the type of data they use, their use cases, and the computational power and other resources they require.

At the end of the day, the choice you make depends on your unique objective.

However, both systems need to be trained on the right data to work optimally. This is especially true for deep learning, which is hard to interpret and prone to absorbing biases from its training data.

To get the best performance, you need expert insights from the right machine learning consulting firm. Such experts know which models suit your unique business needs. More importantly, they can help you design, train, and deploy models that align with your objectives while keeping risk to a minimum.

This is exactly what we do at Debut Infotech Pvt Ltd. Our machine learning experts are always available to give you the right guidance.

Schedule a consultation today to build trust and gain long-term value for your investors!

Frequently Asked Questions

A. It depends on what you want to do.

Machine learning is particularly well-suited to tasks that involve simple, structured, and labeled data. Deep learning, on the other hand, suits complex tasks that require machines to learn from large volumes of unstructured data.

A. Machine learning models are faster to train than deep learning models. However, deep learning models typically excel at complex tasks such as image generation, voice recognition, and natural language processing. So, while they take longer to train, they usually deliver better results on those tasks.

A. Netflix is a streaming service, but it uses several machine learning techniques to power various aspects of its platform. For example, Netflix uses machine learning to personalize content recommendations, improve streaming quality, and enhance the overall user experience.

A. Deep learning has numerous applications, including image recognition and natural language processing. It is also used in spam filters and customer support chatbots to help interpret content.

A. ChatGPT is an advanced example of deep learning technology, which is a specific subset of machine learning. It uses multi-layered neural networks (transformers) to understand and produce human-like writing.
