Table of Contents

How to Build a Generative AI Model for Image Synthesis

June 19, 2025

June 19, 2025

Have you ever used an AI tool to generate flawless images?

If you haven’t, you’ve probably seen the flurry of unimaginably high-resolution images online recently. We’re at that stage of the internet where separating the truth from the lie is almost impossible due to the enormous ability of AI tools to generate awesome imagery based on just a few prompts.

Do you know what’s responsible for this huge innovation?

Generative AI models!

If you’ve been looking for how to build one of those magical tools, this article is for you.

As an executive or business leader, understanding how to harness the power of generative AI imagery can unlock new levels of creativity, efficiency, and competitive advantage for your enterprise. From marketing to product design, these 21st-century wonders can bring so much innovation and efficiency to any industry.

So, in this article, we’re cutting through the technical jargon to give you a clear, actionable guide for building your own generative AI models for image synthesis.

But first, we’ll provide some background information on generative AI models, the meaning of deep generative models image synthesis, and some different types of generative AI models you can use for image synthesis. Most importantly, we’ll share 8 practical steps on how to build a generative AI model for image synthesis in your organization.

Let’s explore the concept of generative AI imagery!

What is a Generative AI Model?

These are complex machine learning models that utilize complex algorithms to produce (generate) new data or innovative ideas similar to their training data. As such, they are very capable of producing high-quality and complex images using pre-existing images (training data) as inspiration. These AI models can either be unsupervised or semi-supervised in nature.

In the context of image synthesis, generative AI models need to be trained with large datasets of images to produce new, realistic, and high-quality images that mimic these training datasets. The bulk of the processes involved in this generation are based on deep learning, a subset of machine learning that involves the identification of complex patterns, textures, and structures within images. That’s how generative AI models learn from and mimic existing images.

However, generative AI models can do more than just generate new images. They can also improve the quality of low-resolution images, manipulate existing images, and create innovative visuals for several business purposes.

What is Image Synthesis?

Although it sounds pretty self-explanatory, some background information on deep generative models image synthesis is still needed.

It is the process of producing new images artificially with the help of an AI model and existing image data. It involves creating visual content that may not necessarily exist in real life but appears realistic and contextually relevant. This could involve anything from simple image manipulations to fully generating complex scenes or objects using advanced AI tools like the generative AI models discussed above.

With the help of these generative AI models, businesses and content creators can create attention-grabbing visuals that maintain the style, structure, and content characteristics of the original images. And that’s a good thing for these creators and businesses because they get to automate and scale the creation of visual assets without the stress and time requirements of manual design.

So, what do these images model do for businesses?

Think about it: marketing, product design, product development, and virtual reality teams can start generating tangible visual ideas in little or no time, thus speeding up innovation, ideation, and execution.

So, image synthesis goes far beyond ‘just creating images.’ Rather, it represents a great opportunity for businesses to generate tailored, scalable, and realistic visual content that drives competitive advantage, and generative AI models are the sophisticated technologies that make it happen.

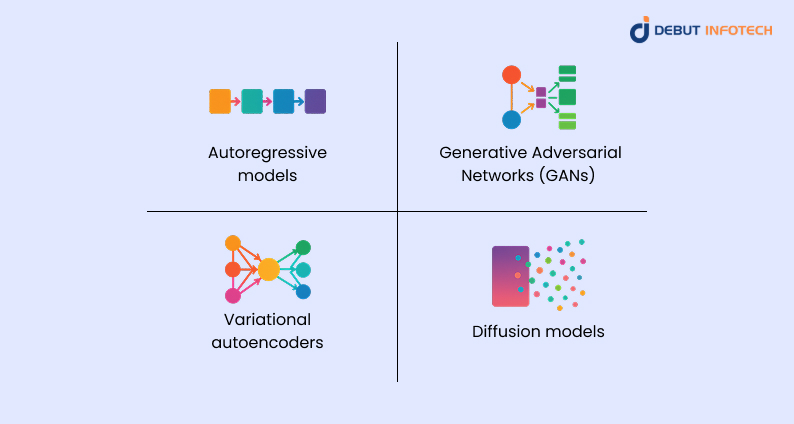

Types of Generative AI Models for Image Synthesis

Different categories of generative AI models can be used for image synthesis. As a business leader or executive looking to build the right image creation models, it is advisable to know the different options available and how they work.

Some of them include the following:

1. Autoregressive models

An autoregressive model is a generative AI model that produces new images sequentially, predicting each pixel or patch based on the parts the same model has generated previously. In simpler terms, it’s like an AI model that learns from itself sequentially.

This sequential mode of image generation makes them perfectly suited for generating images that require intricate detailing. We are talking about use cases such as picture inpainting and super-resolution images.

One other standout attribute of autoregressive models is that they can be quite slow because they are generating the pixels sequentially. However, what they lack in speed, they make up for in quality, thus making them highly suitable for generating highly realistic images.

2. Generative Adversarial Networks (GANs)

Generative Adversarial Networks GANS consists of two neural networks —a generator and a discriminator—both of which compete in the image generation process, just like a video game. The generator tries to fool the discriminator when creating images, while the discriminator’s role is to differentiate real images from generated ones. That’s the origin of the keyword, ‘adversarial.’ Both neural networks try to ‘go against’ each other in the image generation process to ensure that the entire model as a whole ends up creating a highly realistic image with intricate features such as texture and patterns.

3. Variational autoencoders

Variational autoencoders are generative AI models that synthesize images with the help of two major components: encoders and decoders. This AI model compresses an image into a smaller, more meaningful representation before reconstructing it into the final version. The compression process is known as encoding, while the reconstruction process is decoding.

In the process of encoding and decoding an image (the training data), the variational autoencoder learns the key features defining the image and also learns how to generate new images by sampling variations of the patterns it has learnt.

The fact that they learn the patterns during this process makes them ideal for generating highly realistic images. However, they definitely need to learn from highly realistic pictures (high-quality training data), and this often takes a long time to create. Nonetheless, variational autoencoders are highly suited for creating computer graphics and medical imaging, making them ideal options for healthcare and computer applications.

4. Diffusion models

These are generative AI models that work by gradually adding noise to images (training data) until they transform into random static. They then work their way back to the clear image by gradually reversing the noise addition process to generate a clear image from noise. Once they learn how to do that independently, they leverage the step-by-step denoising process to create highly detailed, ultra-realistic images.

Diffusion models are currently being used in popular AI art creation tools to enable precise control over the image generation process.

Depending on your organization’s image synthesis requirements, you could create any one of these categories of generative AI models to handle the whole process.

If you would like to learn how to build any of these image creation models, jump to the next section.

Unlock Next-Level Image Quality with Expert AI Guidance

Partner with our specialists to build generative AI models that deliver stunning, high-quality images tailored to your business needs.

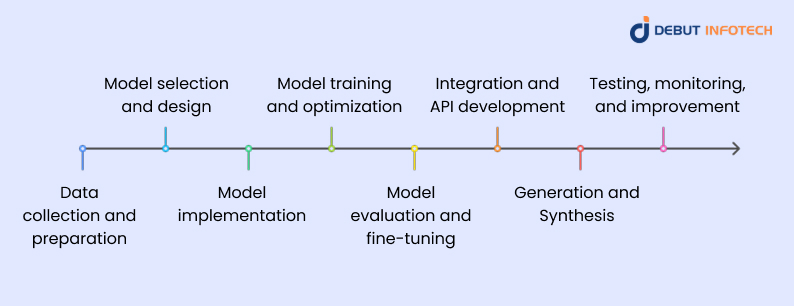

Building a Generative AI Model for Image Synthesis in 8 Practical Steps

Like most generative AI models, building a model for image synthesis requires a systematic approach.

Follow these steps to get your desired model.

1. Data collection and preparation

As you might have noticed with all the generative AI models discussed, the image generation process relies heavily on data. Therefore, the first step to building generative AI models is data collection and preparation.

What does this mean?

It means gathering clean and structured data (high-quality images) from multiple sources to build a robust training foundation. The model you’re about to build will learn patterns, textures, and other intricate details from the image data. Therefore, you must ensure the data you’re sourcing is of the very best quality.

Furthermore, your data preparation process should involve standardizing the data (image) resolution, sizes, and format to enhance the model’s generalization capabilities. This will help you enhance the model’s accuracy and performance from the outset.

2. Model selection and design

Having gathered enough high-quality data, you now have to choose the type of generative AI model you want to build. This depends heavily on the kinds of synthesized image you aim to create and the kind of data you have gathered.

Your options include the generative adversarial networks (GANs), diffusion models, variational autoencoders, and autoregressive models mentioned earlier. It is advisable to ensure that your final choice has both contextual intelligence and real-time decision-making capabilities so that you can achieve the best outcomes.

3. Model implementation

It’s time to start preparing for image creation.

The model implementation process involves setting up the necessary infrastructure for deploying the trained model in a real-world context. You would also have to integrate the model with a real-world environment and your existing systems to mimic how you would use it within your organization.

Furthermore, the model implementation process encompasses implementing real-time generation and monitoring to ensure proper performance optimization. Most of the activities occurring at this implementation stage are geared towards ensuring the model runs smoothly. For instance, you would have to make provisions for scalability so that the model can accommodate increasing demands as your organizational needs increase.

4. Model training and optimization

The model training and optimization phase involves feeding the sourced data to the implemented model. For more context, this is when a variational autoencoder, for instance, encodes and decodes the training data. Likewise, this is the denoising process if you decide to build a diffusion model.

While doing this, it is advisable to iteratively update the model’s parameters so that the difference between the generated and synthesized image can be greatly minimized. Basically, this means making sure that the image you’re generating is as close to reality as possible.

Now, the length of this training and optimization process depends heavily on the model complexity and the level of image quality you’re hoping to achieve.

5. Model evaluation and fine-tuning

Model evaluation and fine-tuning are closely related to the training and optimization process described above. After adjusting your parameters as required and training the model in general, you would have to assess the model’s performance based on pre-set metrics such as inception score and Frechet inception distance.

Depending on your level of satisfaction with the synthesized image, you can now apply various fine-tuning techniques to adjust the hyperparameters (the ones you updated iteratively when training the model initially). It also involves changing the architectures and augmenting the training datasets to adjust your image outputs as close to realistic as possible.

6. Integration and API development

Once the generative AI model’s output meets your expectations, it is time to start integrating it into your existing systems or apps via REST APIs, SDKs, or edge devices. It is very important to ensure this is done seamlessly without disrupting existing operations in the backend.

Furthermore, the integration and API development process must allow real-time data processing and intelligent automation to ensure that your final product is a seamless image synthesis app or system.

7. Generation and Synthesis

With the models now fully integrated, you can generate the images you want as you would during random everyday use.

Ensure everything is working as expected by using random prompts an ordinary user would use to generate an image to be sure that the generative AI model you have just created can indeed match up with everyday applications. You also want to make sure that you confirm if the generative AI model’s output is unique images totally different from the original training dataset. This is the ultimate test that the generative AI model is capable of producing novel images by learning from any given training dataset.

8. Testing, monitoring, and improvement

Finally, conduct rigorous validation checks using traditional testing procedures, such as simple A/B tests, confusion matrices, and performance benchmarks.

Monitor all components of the generative AI model as you do this to ensure that all the neural networks and other app components are functioning according to your expectations. And even after deploying, you still need to look out for user feedback, model drifts, and interference performances to make sure that you can fine-tune the entire AI model according to your business needs and requirements.

Once you’re comfortable with the output after full deployment, it is safe to say you have just successfully built and launched a generative AI model for image synthesis.

Start Creating Exceptional AI-Generated Images Today

Connect with our AI development experts to craft generative models that produce images of unmatched quality.

Conclusion

If you would like to build a generative AI model that surpasses your expectations in terms of image synthesis, you have to approach it strategically and methodically.

It begins with gathering the right data and selecting the appropriate model architecture matching your expectations. Next, you train it with the gathered datasets before integrating it into your existing systems. With close monitoring, evaluation, and fine-tuning, you should be able to assess its performance, adjust your parameters, and ensure the model matches your expectations.

Once you have that, all that is left is testing and post-deployment support.

Still sounding like a lot?

Partnering with an expert generative AI development company can make all the difference. Our team offers specialized guidance—from data strategy and model selection to training, deployment, and ongoing optimization—helping you avoid common pitfalls and maximize ROI. We ensure your generative AI model aligns with your unique business objectives and scales effectively as your needs evolve.

Consult with our generative AI consultants today and start building your ideal generative AI model!

Frequently Asked Questions (FAQs)

A. AI image synthesis is the method of creating fresh digital photographs from scratch with artificial intelligence algorithms. Without using manual design or conventional photography, these models generate realistic or artistic graphics by learning patterns from massive datasets.

A. No, rendering is the process of producing images from 3D models or scenery by simulating light and materials. In contrast, image synthesis is the creation of new images utilizing AI and learned data patterns. Rendering turns pre-existing models into pictures, while synthesis turns data into pictures.

A. It involves gathering large, varied datasets, choosing suitable architectures such as diffusion models or GANs, and training neural networks to recognize patterns in the data. Through iterative learning, the model gradually gets better at producing realistic images.

A. The process of training involves providing the model with an extensive image dataset so that it may repeatedly learn patterns and features. Methods like denoising (diffusion models) and adversarial training (GANs) reduce errors and enable the model to produce more realistic images.

A. In particular, image synthesis is the process of producing images by fusing rendered techniques and learning content, frequently using artificial intelligence. Any process of creating new images, including synthesis, text-to-image, and procedural generation techniques, is referred to as image generation.

Our Latest Insights

Leave a Comment