
How To Enhance AI Agent Security With ZKPs: Build Trust In Autonomous Intelligence

December 30, 2025


AI agents can truly do amazing things.

But can you really trust them to make decisions on your behalf? Can you be sure they act without bias, and without compromising sensitive data, when no one is supervising them?

That moment of hesitation captures the central problem with AI agents today.

Yes, traditional security measures such as encryption, authentication, and firewalls do an excellent job of protecting the data around an AI system. But what about what happens inside the AI agent? These controls can’t confirm that an agent made ethical, compliant, or authorised choices. In a business climate where decisions are decentralised and data flows between countless digital actors, trust must evolve from assumption to verification.

This is where Zero-Knowledge Proofs (ZKPs) come in. ZKPs allow AI agents to prove their integrity, authenticity, and compliance mathematically, without revealing sensitive information.

In this article, we’ll explore:

- What AI agent security really means in business terms.

- How Zero-Knowledge Proofs bridge privacy and accountability.

- Practical ways to strengthen multi-agent AI security with ZKPs.

- A step-by-step guide to building a ZKP-enhanced AI security framework for your enterprise.

At Debut Infotech Pvt Ltd, we believe trust isn’t an afterthought; it’s the architecture of intelligent systems. This article shows you why.

Understanding AI Agent Security in Simple Terms

First things first: AI agents are autonomous systems capable of receiving and processing data, making decisions, and acting with minimal human intervention. In the early days of the AI revolution, they were mere static algorithms capable of performing simple tasks. But today, they are quietly powering everything from automated research assistants to dynamic supply-chain optimisers.

These “agents” no longer just respond to commands. They now interpret a user’s intent, collaborate with other agents, and even negotiate outcomes in complex digital ecosystems.

Awesome, right?

But here’s the catch: as AI agents gain more autonomy, they also introduce a new category of risk.

You see, when several agents operate together in what’s called a multi-agent system, they exchange information, trigger actions, and sometimes make decisions with financial or legal consequences. So how do you trust those interactions? How can a business confirm that an AI agent is who it claims to be, or that it handled data responsibly, without exposing that data?

This uncertainty is the core of AI agent security.

AI agent security concerns safeguarding not just the data an agent uses, but also its identity, behaviour, and integrity within a network.

Traditional cybersecurity controls, such as firewalls and encryption, protect data “at rest” or “in transit.” Yet they struggle with what happens inside the AI logic: how models reason, learn, and communicate once deployed.

Consider a scenario. A retail enterprise uses an AI agent to conduct a security survey across hundreds of partner systems. If that agent were compromised, it could misreport findings or leak sensitive insights. That’s why AI development providers such as Debut Infotech are helping enterprises like yours build multi-agent AI security systems. These frameworks enable each agent to prove its authenticity, verify its data sources, and validate its outputs without disclosing proprietary information. In practice, that means embedding cryptographic and behavioural safeguards directly into the agents’ workflows, ensuring both compliance and accountability.

But how can AI agents actually prove trustworthiness without exposing their logic or data? That’s where a cryptographic technique known as Zero-Knowledge Proofs is transforming how organisations think about verification and privacy.

Read on to see what they are.


Zero-Knowledge Proofs: The Key to Verifiable AI Trust

Trust is arguably the most important thing when it comes to autonomous AI.

A Zero-Knowledge Proof is a cryptographic technique or protocol that enables one entity, the prover, to convince another, the verifier, that a statement is true without revealing any underlying information. It is just like confirming that a person holds a valid passport without them revealing their passport number or personal details to you.

Now, replace that person with an AI agent processing private or regulated data. The same concept applies. The agent can prove it accessed only authorised data, applied approved logic, or complied with a governance rule without exposing the data, the algorithm, or any proprietary information.

But how is that even possible?

Encryption protocols protect data at rest and in transit. However, they cannot verify what an AI agent actually did with that data once it used it in its operations.

ZKPs bridge that gap by giving mathematical assurance that an agent’s processes complied with regulatory policies, privacy protocols, and ethical requirements, without the verifier ever seeing the underlying data.

What does this mean in technical terms?

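ZKPs are grounded in three core properties: completeness (a true statement, honestly proven, always verifies), soundness (a false claim is rejected, except with negligible probability), and zero-knowledge (the verifier learns nothing beyond the fact that the statement is true).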
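
To make the three properties concrete, here is a minimal sketch of the interactive Schnorr identification protocol, a classic zero-knowledge proof of knowledge of a discrete logarithm. It assumes a toy group (p = 2039, q = 1019, g = 4) that is far too small for real use, and all function names are illustrative. An honest prover always passes (completeness), a prover without the secret passes only if it guesses the verifier's challenge in advance (soundness), and accepting transcripts can be produced without the secret at all, which is the intuition behind (honest-verifier) zero-knowledge.

```python
import secrets

# Toy parameters (assumption, insecure): p = 2q + 1 is a safe prime and
# g = 4 generates the subgroup of prime order q.
P, Q, G = 2039, 1019, 4


def keygen():
    """Return (secret x, public y = g^x mod p)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def verify(y, t, c, s):
    """Verifier accepts iff g^s == t * y^c (mod p)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def honest_round(x, y):
    r = secrets.randbelow(Q)          # prover's fresh randomness
    t = pow(G, r, P)                  # 1. prover sends commitment t
    c = secrets.randbelow(Q)          # 2. verifier sends random challenge c
    s = (r + c * x) % Q               # 3. prover answers with s
    return verify(y, t, c, s)


def cheating_round(y):
    """A prover without x commits to a guessed challenge and hopes it is right."""
    c_guess, s = secrets.randbelow(Q), secrets.randbelow(Q)
    t = (pow(G, s, P) * pow(y, -c_guess, P)) % P   # negative exponent needs Python 3.8+
    c = secrets.randbelow(Q)          # the verifier's real challenge
    return verify(y, t, c, s)         # accepted only when c == c_guess


def simulate_transcript(y):
    """Produce an accepting (t, c, s) without knowing x: pick c and s first."""
    c, s = secrets.randbelow(Q), secrets.randbelow(Q)
    t = (pow(G, s, P) * pow(y, -c, P)) % P
    return t, c, s


if __name__ == "__main__":
    x, y = keygen()
    print(all(honest_round(x, y) for _ in range(100)))                  # completeness
    print(sum(cheating_round(y) for _ in range(1000)), "of 1000 cheats accepted")
    print(all(verify(y, *simulate_transcript(y)) for _ in range(100)))  # simulatable transcripts
```

With a challenge space of size q, a cheater succeeds about once in q attempts; real deployments use groups of roughly 2^256 elements so that probability becomes negligible.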

So why does this matter to your enterprise, and to you as an executive?

Because businesses need to shift from passive data protection to active verification, and ZKPs are key to that evolution. In fact, innovative AI agent companies such as Debut Infotech Pvt Ltd are already embedding ZKP protocols into enterprise AI systems. This enables organisations to validate compliance, authenticity, and decision integrity without sacrificing control over their data.

Of course, Zero-Knowledge Proofs don’t magically fix bad models or governance gaps. They’re only as strong as the rules you decide to enforce. But they finally offer a practical way to build AI ecosystems where trust isn’t assumed; instead, it’s mathematically proven.

And that leads us to the next big question: What, exactly, can AI agents prove to make themselves truly secure?


How ZKPs Strengthen AI Agent Security

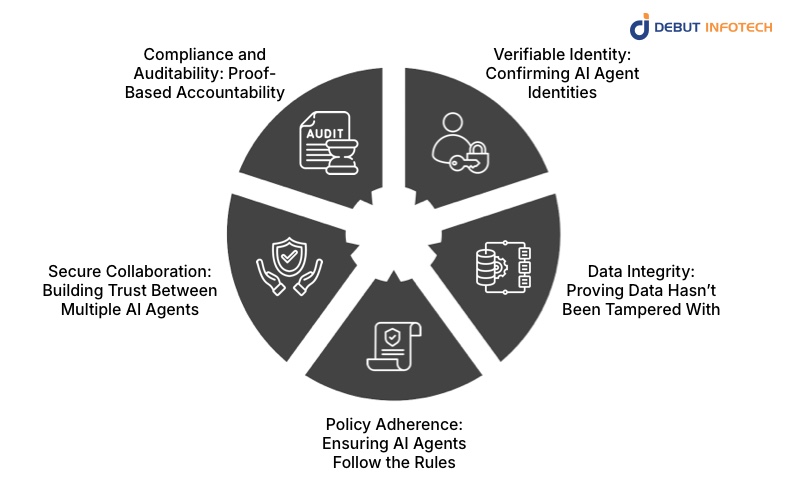

ZKPs let AI agents prove claims about their identity, data integrity, behaviour, and compliance, which makes them provably trustworthy in exactly those respects.

The following are some more tangible ways through which they strengthen AI security:

1. Verifiable Identity: Confirming AI Agent Identities

There are usually multiple AI entities in a multi-agent ecosystem, all of which may be exchanging data, negotiating transactions, or automating compliance tasks.

While this makes the AI agent system more productive, it also carries the significant risk of malicious agents masquerading as authentic ones.

ZKPs solve this elegantly. Each agent can cryptographically prove its identity and authorisation without exposing its private keys or credentials. It’s like digital identification with built-in privacy. This is essential in financial networks or healthcare systems where identity misuse could have severe consequences.
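
A common way to realise this is a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic: the agent proves it knows the private key behind its registered public value, and the proof is bound to its agent ID and a fresh verifier nonce so it cannot simply be replayed. The sketch below assumes a toy group (p = 2039, q = 1019, g = 4) chosen only for readability and insecure in practice; `prove_identity`, `verify_identity`, and the `registry` are hypothetical names. Production systems use standardised groups or elliptic curves.

```python
import hashlib
import secrets

# Toy group (assumption, insecure): p = 2q + 1, g = 4 of prime order q.
P, Q, G = 2039, 1019, 4


def _challenge(agent_id: str, nonce: bytes, y: int, t: int) -> int:
    """Fiat-Shamir: hash the public context into a challenge in [0, q)."""
    data = f"{agent_id}|{y}|{t}|".encode() + nonce
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_identity(agent_id: str, x: int, nonce: bytes) -> tuple[int, int]:
    """Prove knowledge of x with y = g^x, bound to the agent ID and the verifier's nonce."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)
    t = pow(G, r, P)
    c = _challenge(agent_id, nonce, y, t)
    return t, (r + c * x) % Q          # the private key x is never sent


def verify_identity(agent_id: str, y: int, nonce: bytes, proof: tuple[int, int]) -> bool:
    t, s = proof
    c = _challenge(agent_id, nonce, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    # Hypothetical registry mapping agent IDs to registered public values.
    x = secrets.randbelow(Q - 1) + 1
    registry = {"agent-42": pow(G, x, P)}

    nonce = secrets.token_bytes(16)    # the verifier issues a fresh nonce per attempt
    proof = prove_identity("agent-42", x, nonce)
    print(verify_identity("agent-42", registry["agent-42"], nonce, proof))  # True
```

Because the nonce feeds the challenge, a proof captured for one session fails against a new nonce; in this toy group a replay still slips through about once in q tries, which is another reason real groups are much larger.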

2. Data Integrity: Proving Data Hasn’t Been Tampered With

AI agents are only as reliable as the data they consume. But once data starts moving across departments, partners, or even autonomous nodes, how do you know it hasn’t been altered?

ZKPs help to prove that this data hasn’t been tampered with by making the data lineage verifiable. This means that each agent can attach cryptographic proofs that confirm the data’s authenticity and integrity, even as it travels between systems. That’s why industries like insurance, supply chain, and government analytics, which handle regulated or confidential data, are exploring agentic AI for secure data handling using ZKP-based validation frameworks.
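
Verifiable lineage usually starts with a commitment to the data, and a Merkle tree is a standard choice: the sending agent publishes a single root hash, and any individual record can later be shown to belong to that committed set using a short path of sibling hashes. The sketch below is a minimal, hypothetical implementation. Note that a Merkle proof on its own is an integrity proof rather than a zero-knowledge one, because it reveals sibling hashes; ZKP systems often prove statements about data committed this way.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(records: list[bytes]) -> list[list[bytes]]:
    """Hash records into a Merkle tree; levels[0] are leaves, levels[-1] is [root]."""
    if not records:
        raise ValueError("need at least one record")
    level = [_h(b"\x00" + rec) for rec in records]      # 0x00 prefix marks leaves
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level  # duplicate an odd tail
        level = [_h(b"\x01" + padded[i] + padded[i + 1])           # 0x01 marks inner nodes
                 for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def inclusion_proof(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with whether our node is on the left."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sib = level[sibling] if sibling < len(level) else level[index]
        proof.append((sib, index % 2 == 0))
        index //= 2
    return proof


def verify_inclusion(root: bytes, record: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    node = _h(b"\x00" + record)
    for sib, node_is_left in proof:
        node = _h(b"\x01" + node + sib) if node_is_left else _h(b"\x01" + sib + node)
    return node == root


records = [b"claim-001:approved", b"claim-002:denied", b"claim-003:approved"]
levels = build_levels(records)
root = levels[-1][0]                      # the only value the receiving agent needs up front
proof = inclusion_proof(levels, 1)
print(verify_inclusion(root, b"claim-002:denied", proof))    # True
print(verify_inclusion(root, b"claim-002:approved", proof))  # False: record was altered
```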

3. Policy Adherence: Ensuring AI Agents Follow the Rules

Here’s where the magic of ZKPs meets governance. Imagine confirming that an AI agent followed every operational, ethical, or compliance rule, without you having to inspect its algorithm or datasets.

That is exactly what ZKPs enable: they mathematically prove that an AI’s decision path conforms to defined policies or protocols. As a result, organisations can verify an agent’s compliance with internal ethics guidelines and external regulations without ever sharing their proprietary data.
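
A ZKP system such as a zk-SNARK proves that a private witness satisfies a set of arithmetic constraints. The sketch below shows only that constraint layer, for a hypothetical policy "a payment an agent approves must not exceed a public limit"; the proving system that would keep the amount hidden is not shown. The 16-bit width and all names are assumptions, and real circuits work over a finite field, whereas this sketch checks over plain integers.

```python
N_BITS = 16  # assumption: amounts and limits fit in 16 bits


def to_bits(value: int, n: int = N_BITS) -> list[int]:
    return [(value >> i) & 1 for i in range(n)]


def build_witness(amount: int, limit: int) -> dict:
    """Private witness the agent would feed to a prover."""
    return {
        "amount": amount,
        "amount_bits": to_bits(amount),
        "slack_bits": to_bits(limit - amount),   # encodes limit - amount >= 0
    }


def constraints_hold(witness: dict, limit: int) -> bool:
    """The checks a circuit would enforce; `limit` is public, the witness is private."""
    amount = witness["amount"]
    bits, slack = witness["amount_bits"], witness["slack_bits"]
    if len(bits) != N_BITS or len(slack) != N_BITS:
        return False
    booleans = all(b * (b - 1) == 0 for b in bits + slack)                # each entry is 0 or 1
    amount_ok = sum(b << i for i, b in enumerate(bits)) == amount          # 0 <= amount < 2^N_BITS
    slack_ok = sum(d << i for i, d in enumerate(slack)) == limit - amount  # limit - amount >= 0
    return booleans and amount_ok and slack_ok


print(constraints_hold(build_witness(750, 1000), 1000))   # True: within policy
print(constraints_hold(build_witness(1200, 1000), 1000))  # False: slack would be negative
```

The boolean constraints matter: without them, a dishonest agent could "decompose" a negative slack as a single non-binary entry and pass the range check.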

4. Secure Collaboration: Building Trust Between Multiple AI Agents

Modern organisations rarely operate in isolation. In collaborative ecosystems such as financial networks, logistics, or federated AI training, agents must cooperate across divisions, subsidiaries, and even rival businesses.

ZKPs allow these agents to exchange proof of correctness or fairness without revealing private data. In other words, two competing agents can cooperate, verify each other’s outputs, and still protect their proprietary models.

This capability is at the core of multi-agent AI security, making decentralised, cooperative AI possible without sacrificing data sovereignty or privacy.
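
One building block for this kind of cooperation is a homomorphic commitment. With Pedersen commitments, multiplying commitments yields a commitment to the sum of the hidden values, so agents can jointly prove a total (for example, combined inventory) without any agent opening its own figure. The sketch assumes a toy group (p = 2039, q = 1019, g = 4, h = 9) that is insecure, since the discrete log of h is trivial to find at this size; how agents combine their blinding factors without revealing them individually (for instance, via a secure-aggregation step) is out of scope. All names and values are illustrative.

```python
import secrets

# Toy group (assumption, insecure): p = 2q + 1, and g = 4, h = 9 both have order q.
# Real Pedersen commitments need an h whose discrete log to base g nobody knows.
P, Q, G, H = 2039, 1019, 4, 9


def commit(value: int, blinding: int) -> int:
    """Pedersen commitment C = g^value * h^blinding mod p."""
    return (pow(G, value % Q, P) * pow(H, blinding % Q, P)) % P


def verify_joint_total(commitments: list[int], total: int, total_blinding: int) -> bool:
    """Multiplying commitments adds the committed values, so the product opens to the sum."""
    product = 1
    for c in commitments:
        product = (product * c) % P
    return product == commit(total, total_blinding)


# Three hypothetical agents each commit to a private figure.
values = [120, 305, 77]
blindings = [secrets.randbelow(Q) for _ in values]
commitments = [commit(v, r) for v, r in zip(values, blindings)]

# Only the total and the combined blinding factor are disclosed.
print(verify_joint_total(commitments, sum(values), sum(blindings) % Q))  # True
```

With only two participants, the disclosed total would reveal each agent's value to the other, so this pattern suits three or more agents or an external auditor.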

5. Compliance and Auditability: Proof-Based Accountability

Traditional AI audits involve invasive data access and extensive manual checks. ZKPs allow auditors or regulators to confirm compliance evidence quickly and mathematically rather than by hand.

By doing this, compliance is transformed from a bottleneck into a real-time assurance system that satisfies ethical and legal requirements while protecting privacy. That’s more than simply a checkbox for highly regulated industries; it’s a competitive advantage.

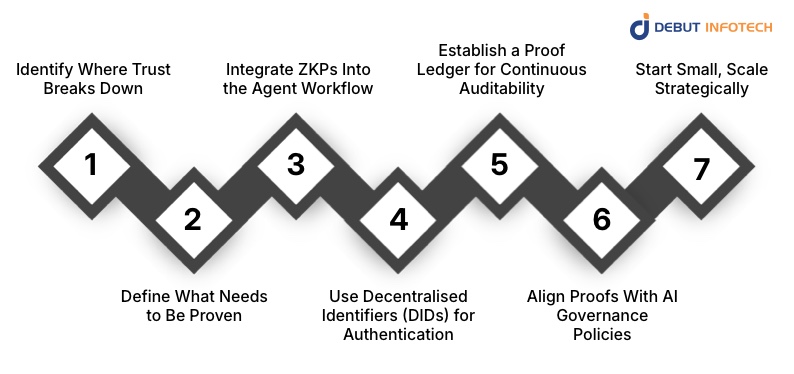

Building a ZKP-Enhanced AI Security Framework

Knowing what ZKPs can do is one thing. The real value lies in understanding how to integrate them into the day-to-day operation of your AI systems. A Zero-Knowledge Proof layer doesn’t replace your existing security stack; it strengthens it, making AI workflows verifiable, private, and auditable from end to end.

The good news is that building such a framework isn’t science fiction anymore. With the right expertise and planning, organisations can start small and scale fast.

1. Identify Where Trust Breaks Down

Every AI ecosystem has “trust gaps.” These are places where you currently assume correctness instead of verifying it.

Common examples include:

- Data sources feeding your AI models.

- The decision-making logic inside autonomous agents.

- The interactions between multiple AI agents exchanging information.

Start by mapping these checkpoints. Each one represents an opportunity for ZKPs to turn assumptions into proofs.

2. Define What Needs to Be Proven

ZKPs work by proving specific statements, so clarity matters. Decide what “truths” you want your agents to verify:

- Identity — proving who the agent is.

- Integrity — proving data hasn’t been changed.

- Policy adherence — proving an agent followed internal rules or regulations.

- Outcome verification — proving results were derived correctly.

The more precisely you define each statement, the more meaningful your proofs will be. Organisations frequently begin with one high-risk area, such as data interchange or regulatory reporting, and expand once the approach has proved itself.

3. Integrate ZKPs Into the Agent Workflow

This is where technology meets design. ZKP protocols can be built directly into the agent’s logic, so that the agent automatically produces a cryptographic proof of correctness each time it completes a critical activity, such as retrieving data, generating a prediction, or sharing results.

Building proof generation into the workflow ensures verification happens every time, not as an afterthought.
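
One way to wire proofs into the workflow is a wrapper that runs after every critical activity and attaches a proof record to its result. In the sketch below, `with_proof`, `commitment_only_prover`, and `score_applicant` are hypothetical. The stand-in prover merely hashes the statement and output and is not zero-knowledge; it only marks where a real ZKP prover call would go.

```python
import functools
import hashlib
import json
import time


def commitment_only_prover(statement, args, kwargs, result):
    """Stand-in prover (assumption): hashes the statement and output.
    This is NOT a zero-knowledge proof; swap in a real ZKP prover here."""
    payload = json.dumps({"statement": statement, "result": result}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def with_proof(statement, prover=commitment_only_prover):
    """Wrap an agent activity so every call returns (result, proof_record)."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            result = fn(*args, **kwargs)
            record = {
                "activity": fn.__name__,
                "statement": statement,
                "timestamp": time.time(),
                "proof": prover(statement, args, kwargs, result),
            }
            return result, record
        return wrapper
    return decorator


@with_proof("risk score computed with the approved scoring rule")
def score_applicant(features):
    # Hypothetical scoring rule used only for illustration.
    return 3 * features["income_band"] + 7 * features["history_band"]


score, record = score_applicant({"income_band": 2, "history_band": 4})
print(score, record["activity"], record["proof"][:16])
```

Because the prover is injected, the same wrapper can later call a real proving backend without touching the agent's business logic.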

4. Use Decentralised Identifiers (DIDs) for Authentication

ZKPs and DIDs work together to give AI agents a tamper-resistant identity layer.

Each agent holds a distinct, cryptographically verifiable identifier and can prove control of it without disclosing its private key. When used in conjunction with ZKPs, DIDs ensure that only verified agents can exchange information, conduct transactions, or initiate automated processes.

In multi-agent AI security, this combination is quickly emerging as a best practice, particularly when numerous stakeholders communicate across networks.
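
Here is a minimal sketch of the DID side, assuming the W3C DID Core syntax (`did:<method>:<method-specific-id>`) and an in-memory dictionary standing in for a real DID resolver. It validates the identifier and returns the authentication key the agent must prove control of; the ZKP check itself would then run against that key. The `did:example` document, the `agent-42` identifier, and the placeholder key value are illustrative.

```python
import re

# DID syntax per W3C DID Core: "did:" method-name ":" method-specific-id,
# where method-name is lowercase letters/digits and the id may contain ':' separators.
_IDCHAR = r"(?:[A-Za-z0-9._-]|%[0-9A-Fa-f]{2})"
DID_PATTERN = re.compile(rf"did:[a-z0-9]+:(?:{_IDCHAR}*:)*{_IDCHAR}+")

# Hypothetical stand-in for a real DID resolver; the key value is a placeholder.
LOCAL_DID_DOCUMENTS = {
    "did:example:agent-42": {
        "id": "did:example:agent-42",
        "verificationMethod": [{
            "id": "did:example:agent-42#key-1",
            "type": "Multikey",
            "controller": "did:example:agent-42",
            "publicKeyMultibase": "zPLACEHOLDER",
        }],
        "authentication": ["did:example:agent-42#key-1"],
    },
}


def resolve_authentication_key(did: str) -> dict:
    """Return the verification method an agent must prove control of."""
    if not DID_PATTERN.fullmatch(did):
        raise ValueError(f"not a syntactically valid DID: {did!r}")
    doc = LOCAL_DID_DOCUMENTS.get(did)
    if doc is None:
        raise LookupError(f"unknown DID: {did}")
    methods = {vm["id"]: vm for vm in doc.get("verificationMethod", [])}
    for ref in doc.get("authentication", []):
        method = methods.get(ref)
        if method is not None and method["controller"] == did:
            return method
    raise LookupError(f"no authentication key for {did}")


print(resolve_authentication_key("did:example:agent-42")["id"])  # did:example:agent-42#key-1
```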

5. Establish a Proof Ledger for Continuous Auditability

A “proof ledger” is a secure, append-only record (off-chain or on-chain) that stores verification proofs rather than raw data. It is particularly valuable for businesses that depend on frequent audits.

Auditors and regulators can query this ledger in real time to verify compliance, which can significantly shorten audit cycles and reduce manual review.

The result: transparency without exposure, accountability without intrusion.
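
A hash chain is one simple way to make such a ledger tamper-evident. In the sketch below (class, field names, and proof strings are hypothetical), each entry stores a proof and the hash of the previous entry, so editing, reordering, or deleting an interior entry breaks verification. Detecting truncation or a wholesale rewrite additionally requires anchoring the latest hash somewhere external, such as a public chain or a regulator's system.

```python
import hashlib
import json


def _entry_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class ProofLedger:
    """Append-only, hash-chained log that stores proofs, never raw data."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, statement: str, proof: str) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {"index": len(self.entries), "statement": statement,
                "proof": proof, "prev_hash": prev}
        digest = _entry_hash(body)
        self.entries.append({**body, "entry_hash": digest})
        return digest  # publishing the latest hash externally anchors the chain

    def verify_chain(self) -> bool:
        """Recompute every link; editing, reordering, or deleting an interior entry breaks it."""
        prev = self.GENESIS
        for i, entry in enumerate(self.entries):
            body = {k: entry[k] for k in ("index", "statement", "proof", "prev_hash")}
            if (entry["index"] != i or entry["prev_hash"] != prev
                    or entry["entry_hash"] != _entry_hash(body)):
                return False
            prev = entry["entry_hash"]
        return True


ledger = ProofLedger()
ledger.append("agent-42 authenticated", "proof-bytes-1")
ledger.append("batch 7 matches committed root", "proof-bytes-2")
print(ledger.verify_chain())            # True
ledger.entries[0]["statement"] = "edited"
print(ledger.verify_chain())            # False
```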

6. Align Proofs With AI Governance Policies

ZKPs are most powerful when tied to governance. Your ethical rules, data-use policies, and compliance mandates should define what gets proven. Debut Infotech often helps clients map ZKP checkpoints directly to governance frameworks, turning static policy documents into live, verifiable controls.

This alignment ensures that AI trust isn’t just declared; it’s continuously demonstrated.
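
In code, this alignment can be as simple as a table mapping each governed action to the proof types it requires (drawn from the statements defined in step 2) and a gate that refuses the action until all of them have verified. The sketch below assumes hypothetical action names and treats unmapped actions as denied by default.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from governed actions to the proof types each one requires.
POLICY_REQUIREMENTS = {
    "share_customer_data": {"identity", "integrity", "policy_adherence"},
    "publish_forecast": {"identity", "outcome_verification"},
}


@dataclass
class ActionRequest:
    action: str
    verified_proofs: set[str] = field(default_factory=set)  # proof types that already verified


def authorise(request: ActionRequest) -> tuple[bool, list[str]]:
    """Allow an action only if every proof its policy requires has verified."""
    required = POLICY_REQUIREMENTS.get(request.action)
    if required is None:
        return False, ["no policy mapping for this action"]  # default-deny
    missing = sorted(required - request.verified_proofs)
    return not missing, missing


print(authorise(ActionRequest("publish_forecast", {"identity", "outcome_verification"})))
print(authorise(ActionRequest("share_customer_data", {"identity"})))
```

The first call prints `(True, [])`; the second prints `(False, ['integrity', 'policy_adherence'])`, listing exactly which proofs are still outstanding.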

7. Start Small, Scale Strategically

Like any emerging technology, the key is to start with focus. Run a pilot in a process that:

- Handles sensitive data,

- Faces regulatory scrutiny, or

- Requires multi-party trust.

Once the pilot confirms a return on trust (shorter audits, clearer compliance logs, and fewer delays in data sharing), the ZKP model can be expanded enterprise-wide.

As open-source frameworks mature and the cost of cryptographic operations continues to fall, adopting ZKPs becomes not only feasible but also financially prudent.


Conclusion: The Path Toward Trustworthy AI Autonomy

As AI agents take on more critical business roles, the question is no longer how capable they are, but how verifiable their actions can be. AI agent security is now about proving that intelligence can operate independently without abandoning oversight.

Zero-Knowledge Proofs (ZKPs) offer a rare kind of balance in that equation. They empower organisations to validate identity, data integrity, and compliance without sacrificing privacy or exposing intellectual property. In doing so, ZKPs move security beyond mere protection and make it an enabler of confidence, compliance, and collaboration.

For business leaders, the message is clear: trust must be built into your AI systems, not bolted on later. And the time to start is now.

At Debut Infotech Pvt Ltd, our AI development teams are already helping enterprises design and deploy agentic AI for secure data handling, integrating ZKP frameworks that make autonomous systems provably trustworthy. Whether you’re modernising data pipelines, building multi-agent ecosystems, or preparing for regulatory AI governance, Debut Infotech helps you architect intelligence you can actually trust.

Because in the age of autonomous intelligence, real innovation begins where trust becomes verifiable.

Frequently Asked Questions (FAQs)

ZKP-enhanced AI software can demonstrate data integrity, authenticate agents, and validate compliance without disclosing private information. By replacing “black box” behaviour with verifiable trust, it helps companies preserve privacy and transparency at the same time.

Encryption keeps data confidential; ZKPs prove that claims about that data are correct. Encryption alone cannot confirm how data was used or whether a policy was followed, whereas ZKPs mathematically verify AI behaviour while keeping the data private. That makes them well suited to regulated sectors deploying agentic AI for secure data handling.

Many AI systems exchange information and make decisions autonomously in multi-agent ecosystems. ZKPs allow agents to confirm fairness and validity without disclosing confidential information, facilitating safe departmental or organisational collaboration.

Indeed. Recent advances in zk-SNARKs and zk-STARKs have made proofs faster to generate and verify and more scalable, bringing many enterprise-grade AI workloads within reach. Debut Infotech incorporates these protocols into production-ready AI environments while balancing security and performance.

Start by identifying trust gaps: places where AI judgments rest on assumption rather than evidence. Next, pilot ZKP verification in high-risk workflows. With the assistance of partners like Debut Infotech Pvt Ltd, these pilots can be developed into scalable frameworks, turning AI security into a quantifiable competitive advantage.
