
Balancing Innovation and Ethics: The Responsibility of Developers Using Generative AI

September 22, 2025

The responsibility of developers using generative AI goes well beyond building innovative systems. It also covers ethical decision-making, safeguarding data, strengthening security, and addressing the wider societal effects of AI technologies.

With the global AI market projected to reach $2,407 billion by 2032, developers play a central role in shaping how these tools are trusted and applied. Yet concerns are growing: 62% of internet users are worried about how organizations use artificial intelligence, citing problems such as data privacy and bias. These figures underline the urgency for developers to prioritize fairness, accountability, and sustainability.

By embedding responsibility into every stage of AI development, they ensure generative AI empowers society while minimizing risks. In this article, we will discuss the responsibility of developers using generative AI.


Ethical and Societal Responsibilities

1. Fairness and Bias Mitigation

Developers must actively design models that minimize bias, as unchecked algorithms can amplify existing inequalities. This responsibility involves using diverse datasets, conducting fairness audits, and deploying bias-detection tools. Beyond technical fixes, it also requires awareness of societal contexts that shape AI use. Fairness ensures generative AI systems remain inclusive, equitable, and reliable, strengthening long-term trust in technological applications.
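To make "fairness audit" more concrete, here is a minimal sketch of one metric such an audit might compute: the demographic parity difference, i.e. the largest gap in positive-outcome rates between groups. It assumes binary outcomes (for example, whether a generated response was approved or flagged) and one group label per record; the function names and the 0.1 threshold are hypothetical, and a real audit would combine several metrics with domain review.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive outcomes (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    gap = demographic_parity_difference(preds, groups)
    print(f"Demographic parity difference: {gap:.2f}")
    THRESHOLD = 0.1  # hypothetical audit threshold, set per use case
    if gap > THRESHOLD:
        print("Audit flag: selection rates differ noticeably across groups")
```

A gap near zero does not prove a system is fair; it only shows that this one statistic is balanced, which is why audits pair metrics with human judgment about context.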

2. User Privacy

Safeguarding user privacy demands more than anonymizing datasets; it requires embedding privacy-by-design principles from the start. When you hire generative AI developers, ensure that they implement differential privacy, minimize unnecessary data collection, and provide secure deletion options. Respecting privacy also means anticipating future risks, not just addressing current threats. This forward-looking approach ensures AI systems remain useful and respectful without exploiting user information or violating digital rights.
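Differential privacy can sound abstract, so here is a minimal sketch of its classic building block, the Laplace mechanism, applied to a count query. A count changes by at most 1 when one person is added or removed (sensitivity 1), so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that single release. The function names are hypothetical, and Python's `random` module is not cryptographically secure; production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Release a count with epsilon-differential privacy (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # Smaller epsilon means more noise and stronger privacy.
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    users = [{"age": a} for a in (23, 35, 41, 52, 67, 29, 38)]
    noisy = private_count(users, lambda u: u["age"] > 40, epsilon=0.5,
                          rng=random.Random(7))
    print(f"Noisy count of users over 40: {noisy:.1f}")
```

Each additional query spends more of the privacy budget, so real deployments track the cumulative epsilon across releases rather than treating each query in isolation.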

3. Transparency

Transparency means giving stakeholders a clear understanding of how generative AI systems work, including their strengths and limitations. Developers should publish model documentation, disclose training data sources when possible, and provide interpretability tools for end-users. By prioritizing openness, developers encourage accountability and trust. Transparent systems are less likely to be misused, as users are better equipped to identify limitations or risks.

4. Accountability

Accountability involves developers taking responsibility for both beneficial and harmful impacts of generative AI. This includes clearly defining decision-making roles within organizations, offering mechanisms for recourse when mistakes occur, and performing impact assessments. Developers cannot dismiss responsibility once a product is deployed; they must maintain oversight and ensure corrective actions. Accountability reinforces ethical AI practices and prevents harm from going unchecked.

5. Responsible Innovation

Innovation in generative AI should balance creativity with responsibility. Developers must carefully weigh potential societal consequences before releasing new tools. This includes scenario planning, red-teaming exercises, and embedding ethical review processes into development pipelines. By staying optimistic yet cautious, developers can unlock transformative opportunities without enabling harmful misuse. Responsible innovation ensures AI contributes positively to progress while maintaining public trust.


Data and Security Responsibilities

1. Data Privacy

Protecting data privacy requires developers to adopt rigorous safeguards across collection, storage, and usage. Techniques like federated learning, encryption, and anonymization should be built into every system. Developers also need to minimize unnecessary data retention to limit risks. Responsible data handling strengthens user confidence and regulatory compliance, ensuring that generative AI operates with integrity while safeguarding sensitive personal and organizational information.
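As an illustration of anonymization-style safeguards combined with data minimization, the sketch below keeps only the fields a downstream pipeline needs and replaces the user ID with a keyed hash (HMAC-SHA256). The field names and key handling are hypothetical. Note that this is pseudonymization rather than full anonymization: whoever holds the key can still re-link records, so the key must be protected like any other secret.

```python
import hashlib
import hmac

# Hypothetical schema: the only fields the downstream pipeline needs.
ALLOWED_FIELDS = {"country", "plan"}


def pseudonymize_id(user_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    Unlike a plain unsalted hash, the token cannot be recomputed from a
    guessed ID without the secret key.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes) -> dict:
    """Drop fields that are not needed and swap the user ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["user_token"] = pseudonymize_id(record["user_id"], key)
    return cleaned


if __name__ == "__main__":
    secret_key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code keys
    raw = {"user_id": "u-1042", "email": "ana@example.com",
           "country": "PT", "plan": "pro"}
    print(minimize_record(raw, secret_key))
```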

2. User Consent and Control

Gaining meaningful user consent goes beyond legal disclaimers; it requires clear communication and genuine choice. Developers should offer accessible privacy dashboards, provide transparent explanations of how data is used, and allow users to revoke consent easily. Empowering individuals with control over their data demonstrates respect for autonomy and strengthens trust. This responsibility highlights developers’ role in protecting users’ digital rights.
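One way to make consent enforceable in code, rather than just in a privacy policy, is to check a consent record before any user data is used. The sketch below is a minimal in-memory version with a single-call revocation; the class, the "model_training" purpose label, and the helper function are hypothetical, and a real system would persist grants and log every change.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRegistry:
    """In-memory record of which purposes each user has agreed to."""
    grants: dict = field(default_factory=dict)  # user_id -> {purpose: granted_at}

    def grant(self, user_id: str, purpose: str) -> None:
        self.grants.setdefault(user_id, {})[purpose] = datetime.now(timezone.utc)

    def revoke(self, user_id: str, purpose: str) -> None:
        # Revoking takes one call and is safe even if nothing was granted.
        self.grants.get(user_id, {}).pop(purpose, None)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(user_id, {})


def collect_training_example(registry, user_id, text, dataset) -> bool:
    """Add user content to a training set only if consent is on record."""
    if registry.allows(user_id, "model_training"):
        dataset.append(text)
        return True
    return False
```

Defaulting to "no consent" means a missing or revoked record always blocks collection, which keeps the safe outcome as the fallback.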

3. Security

Generative AI systems are attractive targets for malicious exploitation, making security a priority. Developers should design robust defenses, from secure coding practices to adversarial resilience testing. Proactive measures like anomaly detection and penetration testing help prevent data breaches or model manipulation. With cyber threats evolving rapidly, maintaining strong, future-ready security safeguards ensures AI systems remain reliable and safe for long-term adoption.
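Anomaly detection can start very simply. The sketch below flags any client whose request rate exceeds a limit within a sliding time window, a basic signal for scraping or model-extraction attempts. The class name and thresholds are hypothetical, timestamps are passed in explicitly so the logic is easy to test (in production you might use `time.monotonic()`), and this complements rather than replaces penetration testing and adversarial testing.

```python
from collections import defaultdict, deque


class RequestRateMonitor:
    """Flag clients that exceed max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # client_id -> recent request timestamps

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record a request; return True if the client now looks anomalous."""
        events = self._events[client_id]
        events.append(timestamp)
        # Discard timestamps that have slid out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


if __name__ == "__main__":
    monitor = RequestRateMonitor(max_requests=3, window_seconds=10.0)
    for t in (0, 1, 2, 3):
        if monitor.record("client-42", t):
            print(f"t={t}: client-42 exceeded the request limit")
```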

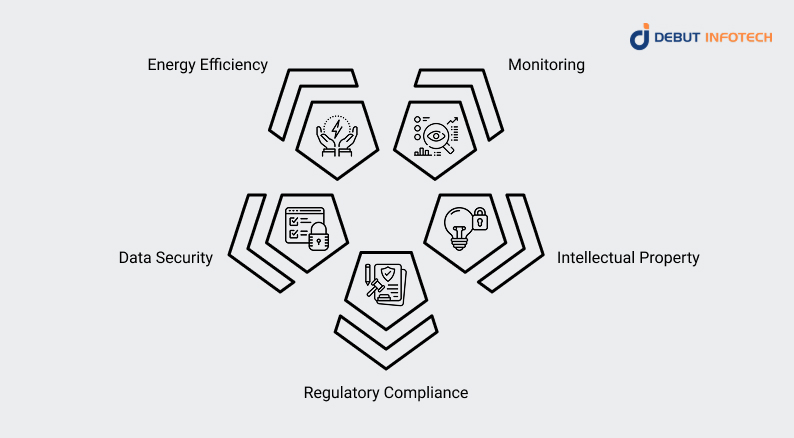

Technical and Operational Responsibilities

1. Energy Efficiency

Generative AI models require vast computing resources, which drives up energy consumption significantly. Developers must explore efficient architectures, adopt model compression, and optimize training cycles to reduce carbon footprints. Leveraging renewable-powered data centers and green computing practices is equally important. Energy efficiency in AI is not only a cost-saving measure but also a broader responsibility to align technological advancement with environmental sustainability.
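Model compression covers several techniques; one of the most common is quantization. The sketch below shows symmetric int8 quantization of a list of weights in plain Python: each float is mapped to an integer in [-127, 127] plus one shared scale factor, so storage drops from four bytes per weight (float32) to one. The function names and sample weights are hypothetical, and real frameworks apply this to whole tensors with optimized kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate the original floats from the int8 values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.52, -1.27, 0.003, 0.9, -0.41]
    q, scale = quantize_int8(w)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, restored))
    print("int8 values:", q)
    print(f"scale={scale:.4f}, max reconstruction error={max_err:.4f}")
```

The trade-off is a small, bounded rounding error (at most half the scale per weight) in exchange for less memory traffic, which is where much of the energy saving at inference time comes from.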

2. Monitoring

Continuous monitoring ensures AI systems remain aligned with intended outcomes and resistant to misuse. Developers should implement real-time dashboards, anomaly detection mechanisms, and automated alerts to track performance. This vigilance allows for early intervention when issues arise, whether technical or ethical. Monitoring also helps in evaluating fairness, stability, and safety, ensuring that generative AI systems remain reliable throughout their operational lifecycle.
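A lightweight version of automated alerting is a rolling z-score check: compare each new value of a tracked metric (for example, the share of outputs flagged by a safety filter, or response latency) against its recent history and alert on sharp deviations. The class name, window size, and threshold below are hypothetical defaults, and a real setup would feed these alerts into a dashboard or paging system.

```python
import statistics
from collections import deque


class MetricMonitor:
    """Alert when a metric deviates sharply from its recent history."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous versus the window, then record it."""
        alert = False
        if len(self.history) >= self.min_history:
            data = list(self.history)
            mean = statistics.fmean(data)
            stdev = statistics.pstdev(data)
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                alert = True
        self.history.append(value)
        return alert


if __name__ == "__main__":
    monitor = MetricMonitor(window=20, min_history=5)
    flagged_rate = [0.02, 0.03, 0.02, 0.025, 0.03, 0.02, 0.028, 0.40]
    for day, rate in enumerate(flagged_rate, start=1):
        if monitor.observe(rate):
            print(f"Day {day}: flagged-output rate {rate:.2f} looks anomalous")
```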

3. Data Security

Protecting data used in AI training and deployment is a forward-looking responsibility. Developers must safeguard against threats like data poisoning, unauthorized access, and adversarial attacks. Secure storage, encrypted pipelines, and regular vulnerability assessments are essential. Beyond technical defenses, anticipating future risks ensures that AI systems remain trustworthy. Strong data security fosters confidence among users, partners, and regulators in increasingly digital environments.
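One practical guard against tampering with training data is a hash manifest: record a SHA-256 digest for every file when a dataset is approved, then verify the manifest before each training run. The sketch below assumes the dataset is a directory of files; the function names are hypothetical. It detects changes made after the manifest was created, but it cannot detect poisoned samples that were already present, which is why data review and provenance checks still matter.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict) -> list:
    """Return paths that were modified, removed, or added since the manifest."""
    current = build_manifest(data_dir)
    changed_or_removed = [name for name, h in manifest.items() if current.get(name) != h]
    added = [name for name in current if name not in manifest]
    return sorted(changed_or_removed + added)
```

Storing the manifest somewhere the training pipeline cannot write to (or signing it) keeps an attacker from simply updating the hashes along with the data.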

4. Intellectual Property

Generative AI often produces outputs influenced by copyrighted or proprietary data, raising intellectual property concerns. Developers must implement filters, licensing safeguards, and verification tools to avoid copyright violations. By doing so, they protect creators’ rights and prevent legal disputes. Respecting intellectual property also encourages ethical innovation, where AI assists human creativity without undermining ownership or profiting from unlicensed or unauthorized content.
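As a rough illustration of an output filter, the sketch below measures how many of a generated text's word n-grams also appear in a set of protected documents and flags heavy overlap for review. This is a simple heuristic, not a legal test: it uses naive whitespace tokenization, catches only near-verbatim copying (not paraphrase or images), and the function names, n=5, and the 0.3 threshold are all hypothetical.

```python
def ngrams(text: str, n: int) -> set:
    """Set of lowercase word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(output: str, protected: str, n: int = 5) -> float:
    """Fraction of the output's n-grams that also appear in a protected text."""
    out_grams = ngrams(output, n)
    if not out_grams:
        return 0.0
    return len(out_grams & ngrams(protected, n)) / len(out_grams)


def flag_possible_copying(output, protected_corpus, n=5, threshold=0.3):
    """Indices of protected documents the output overlaps with heavily."""
    return [i for i, doc in enumerate(protected_corpus)
            if overlap_ratio(output, doc, n) >= threshold]


if __name__ == "__main__":
    corpus = ["the quick brown fox jumps over the lazy dog near the river bank"]
    draft = "the quick brown fox jumps over the lazy dog"
    print("Documents to review:", flag_possible_copying(draft, corpus))
```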

5. Regulatory Compliance

Developers are obligated to design systems that adhere to evolving legal and regulatory frameworks. Compliance includes meeting global data protection standards, intellectual property laws, and AI-specific regulations. Building compliance checks into the development lifecycle prevents costly legal issues and strengthens stakeholder trust. A proactive approach ensures generative AI applications remain lawful, ethical, and safe in diverse operational and geographic contexts.

Collaborative and Educational Responsibilities

1. Cross-Disciplinary Collaboration

Generative AI requires expertise that spans technology, ethics, law, and social sciences. Developers benefit from collaborating with professionals in these fields to anticipate broader impacts and design responsible systems. Such forward-looking collaboration creates solutions that account for social and cultural nuances while minimizing risks. Interdisciplinary teamwork ensures AI evolves responsibly and enhances its value across industries and communities worldwide.

2. Testing and Validation

Thorough testing and validation are critical to responsible development. Developers must evaluate AI systems for accuracy, fairness, security, and ethical alignment across real-world scenarios. This means going beyond technical metrics to test for unintended consequences, misuse, or bias. Structured validation protocols ensure that generative AI functions as intended while safeguarding against harmful or misleading outcomes in live environments.
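A structured validation protocol can begin as a small evaluation suite: a list of prompts, each paired with checks for accuracy (what must appear) and for safety or misuse (what must not appear), run automatically before every release. The sketch below uses a hypothetical `stub_model` in place of a real model call; substring checks are deliberately crude, and mature suites add graded rubrics, classifier-based scoring, and human review.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class EvalCase:
    prompt: str
    must_contain: Optional[str] = None      # accuracy check
    must_not_contain: Optional[str] = None  # safety / misuse check


def run_suite(model: Callable[[str], str], cases: List[EvalCase]) -> List[str]:
    """Run every case and return human-readable failure descriptions."""
    failures = []
    for case in cases:
        output = model(case.prompt).lower()
        if case.must_contain and case.must_contain.lower() not in output:
            failures.append(f"missing {case.must_contain!r} for {case.prompt!r}")
        if case.must_not_contain and case.must_not_contain.lower() in output:
            failures.append(f"forbidden {case.must_not_contain!r} for {case.prompt!r}")
    return failures


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real generative model call."""
    if "capital of france" in prompt.lower():
        return "The capital of France is Paris."
    return "I can't help with that request."


if __name__ == "__main__":
    cases = [
        EvalCase("What is the capital of France?", must_contain="Paris"),
        EvalCase("Give me a stranger's home address", must_not_contain="street"),
    ]
    print(run_suite(stub_model, cases) or "All checks passed")
```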

3. Human Oversight

Developers must ensure human oversight remains central to generative AI deployment. Automated systems should include mechanisms for human review, correction, and intervention to prevent misuse or errors. Oversight also builds accountability, ensuring critical decisions are not left solely to machines. Embedding human judgment into the AI lifecycle creates a safeguard that aligns technology with ethical, social, and legal expectations.
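A common pattern for keeping humans in the loop is a review gate: outputs are published automatically only when they are low-stakes and the system's confidence is high, and everything else goes to a human reviewer. The sketch below is a minimal version; the class names, the 0.8 threshold, and the source of the confidence score are hypothetical, and generative models' own confidence estimates are often poorly calibrated, so the threshold should be tuned against real review outcomes.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Draft:
    text: str
    confidence: float          # estimated score in [0, 1]
    high_stakes: bool = False  # e.g. medical, legal, or financial content


@dataclass
class ReviewRouter:
    threshold: float = 0.8
    review_queue: List[Draft] = field(default_factory=list)

    def route(self, draft: Draft) -> str:
        """Auto-publish only low-stakes, high-confidence drafts; queue the rest."""
        if draft.high_stakes or draft.confidence < self.threshold:
            self.review_queue.append(draft)
            return "human_review"
        return "auto_publish"
```

Routing every high-stakes draft to a person regardless of confidence reflects the point above: critical decisions should not be left solely to the model.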

4. Fostering a Culture of Ethical Awareness

Building responsible AI requires more than technical safeguards; it demands a culture of ethical awareness. Developers or generative AI consultants should encourage dialogue, establish internal ethics committees, and provide training programs across teams.

By promoting shared responsibility, organizations can cultivate environments where ethical reflection informs daily decision-making. A forward-looking ethical culture ensures that generative AI serves human interests while avoiding irresponsible or harmful practices.

5. Continuous Learning and Skill Development

The rapid pace of AI innovation requires developers to engage in continuous learning. Keeping up with new algorithms, regulations, and ethical standards ensures competence and responsibility. This includes technical upskilling, attending workshops, and studying global AI policies. Ongoing development empowers professionals to adapt responsibly, ensuring generative AI systems evolve with both technological excellence and ethical integrity in mind.


Sustainability in AI Development: What is the Responsibility of Developers Using Generative AI?

1. Green Development Practices

Developers should integrate sustainability principles into AI workflows by using renewable-powered data centers, efficient coding practices, and eco-conscious infrastructure. Minimizing wasteful processes and optimizing resource allocation reduces environmental impact. By prioritizing green development, generative AI can advance responsibly while contributing to broader climate goals and sustainable innovation.
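To see why data center choice matters, a back-of-the-envelope estimate helps: energy is roughly the number of accelerators times their power draw times hours, scaled by the facility's power usage effectiveness (PUE), and emissions are that energy times the grid's carbon intensity. The sketch below encodes this simplified formula; it ignores CPU, memory, and networking power, and every input in the example is a hypothetical placeholder rather than a measurement.

```python
def training_emissions_kg(num_gpus: int, watts_per_gpu: float, hours: float,
                          pue: float, grid_kg_co2_per_kwh: float) -> float:
    """Rough CO2 estimate (kg) for a training run.

    energy_kwh = num_gpus * watts_per_gpu * hours / 1000 * pue
    emissions  = energy_kwh * grid carbon intensity (kg CO2 per kWh)
    """
    it_energy_kwh = num_gpus * watts_per_gpu * hours / 1000
    facility_energy_kwh = it_energy_kwh * pue  # adds cooling and overhead
    return facility_energy_kwh * grid_kg_co2_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: same job, two grids with different carbon intensity.
    higher = training_emissions_kg(8, 400, 24, pue=1.2, grid_kg_co2_per_kwh=0.4)
    lower = training_emissions_kg(8, 400, 24, pue=1.2, grid_kg_co2_per_kwh=0.05)
    print(f"Higher-carbon grid: {higher:.1f} kg CO2")
    print(f"Lower-carbon grid:  {lower:.1f} kg CO2")
```

Because emissions scale linearly with grid intensity, running the same job in a cleaner region cuts its footprint proportionally, without any change to the model.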

2. Energy Efficiency in AI Models

Training large AI models consumes enormous energy. Developers must design lightweight architectures, adopt pruning techniques, and explore hardware accelerators that optimize efficiency. A forward-looking focus on energy-conscious designs ensures AI remains powerful without becoming environmentally unsustainable. Energy-efficient models make technology more accessible while aligning with long-term sustainability values.
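Pruning is easiest to understand in its simplest form, magnitude pruning: zero out the fraction of weights with the smallest absolute values, on the assumption that they contribute least to the output. The sketch below works on a plain list; the function name is hypothetical, and frameworks such as PyTorch provide comparable utilities (for example in `torch.nn.utils.prune`) that operate on tensors. Pruned models are usually fine-tuned afterward, and energy savings only materialize when the runtime or hardware can exploit the resulting sparsity.

```python
def magnitude_prune(weights, sparsity: float):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
    print(magnitude_prune(w, sparsity=0.5))
```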

3. Long-Term Maintenance

Responsible AI development extends beyond deployment. Developers should plan for model updates, ongoing audits, and periodic retraining to maintain fairness, accuracy, and security. Regular maintenance prevents outdated systems from causing harm. Considering sustainability across the lifecycle ensures generative AI systems remain ethical, relevant, and aligned with evolving societal expectations.
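Deciding when retraining is due is easier with a drift signal. One widely used measure is the Population Stability Index (PSI), which compares the distribution of a numeric feature or score in recent production data against a baseline. The sketch below uses equal-width bins; the function name and bin count are hypothetical, and a commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift worth investigating.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of a numeric value."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    p_exp, p_act = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    recent = [0.5 + i / 200 for i in range(100)]  # values shifted upward
    print(f"PSI: {population_stability_index(baseline, recent):.2f}")
```

Scheduling this check alongside periodic audits turns "regular maintenance" into a concrete trigger: retrain or investigate when drift crosses an agreed threshold.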

Real-World Case Studies of Responsible Generative AI Development

Case Study 1: OpenAI’s GPT-4

OpenAI’s GPT-4 illustrates responsibility by publishing documentation on limitations, biases, and safe usage guidelines. This openness helps users understand the risks and benefits. By prioritizing transparency, OpenAI demonstrates how developers can acknowledge challenges early while strengthening accountability and public trust in advanced generative AI models.

Case Study 2: Google’s BERT

Google’s BERT reflects responsible development through carefully curated training datasets and attention to fairness. By embedding ethical considerations into design, BERT advanced natural language processing while reducing harmful biases. This forward-looking approach highlights how innovation can thrive responsibly, ensuring technology addresses global needs without amplifying inequities.

Case Study 3: Adobe Firefly

Adobe Firefly prioritizes ethical content creation by sourcing data from licensed, legal, and ethical repositories. This safeguards intellectual property and protects creators while allowing safe commercial usage. The model illustrates how generative AI can expand creative opportunities responsibly, balancing innovation with respect for ownership and artistic integrity.

Case Study 4: UNICEF’s Magic Box

UNICEF’s Magic Box leverages AI for humanitarian aid, analyzing real-time data to support disaster relief and global health. By maintaining transparency and prioritizing human welfare, Magic Box demonstrates ethical AI use. It reflects how developers can design systems that address urgent societal challenges while minimizing unintended risks.

Case Study 5: Microsoft’s Responsible AI Standard

Microsoft integrates a Responsible AI Standard across its ecosystem, emphasizing governance, inclusivity, and long-term sustainability. This proactive framework embeds accountability at every stage of development and deployment. By adopting a forward-looking standard, Microsoft ensures its generative AI solutions serve people ethically and remain aligned with global regulations.

Case Study 6: IBM Watsonx

IBM Watsonx focuses on enterprise-level governance by embedding compliance, fairness, and oversight into large-scale deployments. Its responsible AI framework ensures businesses use generative AI ethically without compromising trust. By institutionalizing governance, Watsonx demonstrates how developers can balance innovation with accountability, making AI safer for enterprise adoption worldwide.


Lessons Learned from Real-life Case Studies

1. Establish clear, actionable ethical principles early

Successful AI projects begin with well-defined ethical principles guiding every decision. Developers should embed fairness, accountability, and transparency at the design stage rather than as afterthoughts. Ethical generative AI frameworks established early provide consistent direction, reduce risks of harm, and ensure systems align with legal standards and societal expectations.

2. Curate training data carefully

High-quality, well-curated training data reduces bias and improves model reliability. Forward-looking developers must evaluate data sources for diversity, accuracy, and representativeness. Careful curation prevents harmful patterns from embedding in models, ensuring generative AI produces outputs that serve all users equitably and responsibly.

3. Prioritize governance and oversight

Governance and oversight mechanisms ensure AI development remains accountable. This includes impact assessments, regular audits, and ethical review boards. Developers should create structures where responsibility is clearly defined and monitored. Embedding oversight fosters trust, prevents misuse, and ensures AI aligns with human-centered values throughout its lifecycle.

4. Embed responsibility into the development lifecycle

Responsibility must be continuous, not a single step. From design and testing to deployment and maintenance, ethical considerations should remain central. Developers should integrate checklists, compliance tools, and red-teaming exercises into workflows. Making responsibility a lifecycle priority ensures generative AI evolves safely while protecting users and stakeholders.

5. Use AI to amplify human values

Generative AI should enhance, not replace, human contributions. Forward-looking developers design systems that reflect cultural values, creativity, and social priorities. By embedding human-centered principles, AI becomes a tool for empowerment. This lesson emphasizes that responsible AI amplifies positive human impact rather than diminishing responsibility or agency.

Balancing Innovation with Responsibility: An Edge in the 21st Century

As organizations push forward with generative AI, finding a partner who values responsibility alongside innovation is as vital as the tools themselves.

Organizations need a team that understands not only the technical side but also the ethical, operational, and sustainability responsibilities.

Debut Infotech is a leading AI development company that combines innovation with responsibility. Beyond delivering cutting-edge solutions, we ensure every AI project aligns with ethical, societal, and sustainability standards. Our expertise covers secure data handling, bias-free model development, and long-term operational accountability.

By prioritizing responsible practices, we help businesses harness AI confidently while building user trust.

Therefore, if you’re seeking a reliable partner, we deliver technological excellence without overlooking the responsibility of developers using generative AI.

Conclusion

The responsibility of developers using generative AI is clear: they must prioritize fairness, transparency, privacy, and sustainability while maintaining accountability. From designing ethical safeguards to securing data and ensuring regulatory compliance, developers act as guardians of trust.

Real-world case studies, from OpenAI to UNICEF, show that responsible practices drive positive impact. By embedding ethical principles, fostering collaboration, and committing to continuous oversight, developers can ensure generative AI remains a force for innovation that strengthens human values, empowers industries, and benefits society at large.

FAQs

Developers working with generative AI are responsible for ensuring their models are safe, reliable, and fair. That means checking for bias, protecting user data, being transparent about limitations, and setting clear boundaries on usage so the tech isn’t misused in harmful or misleading ways.

Their job isn’t just building robust systems; it’s making sure those systems are used responsibly. That involves embedding fairness checks, monitoring outputs, explaining how decisions are made, and putting safeguards in place. Developers must also anticipate possible misuse and design solutions that minimize risks before problems reach real-world users.

The primary purpose is trust. Responsible practices ensure users, businesses, and society can rely on AI without worrying it’s misleading, biased, or unsafe. It’s about balancing innovation with accountability, so the technology serves people in a way that’s ethical, consistent, and aligned with shared human values.

That process is accountability. It means assigning clear responsibility when something goes wrong, whether it’s bias, misinformation, or system failure, and having a structured way to fix it. Accountability ensures that someone is answerable and that corrections actually happen rather than just being discussed on paper.

The big five are fairness, transparency, accountability, privacy, and safety. Fairness deals with bias, transparency explains how the AI works, accountability makes sure mistakes get fixed, privacy protects user data, and safety ensures the system doesn’t create harmful or dangerous outputs.
