How Text-to-Image Models Turn Words into Stunning Visuals

February 13, 2025

For the past few years, text-to-image models have been transforming the field of AI by generating stunning visuals from simple text prompts. These tools are reshaping content creation, making it easier than ever to produce unique, high-quality images in seconds. So why might marketers use text-to-image models in the creative process? Because they streamline workflows, reduce costs, and enhance creative output.

In this article, we’ll explore how text-to-image models work, dive into their history, and examine the most popular models shaping the future of AI-generated art.

The Evolution of Text-to-Image Models

Back in 2015, researchers at the University of Toronto unveiled the first modern text-to-image model, called alignDRAW. This model built upon the earlier DRAW architecture, which utilized a recurrent variational autoencoder with an attention mechanism, but with the added ability to be guided by text sequences. While the images that alignDRAW produced were rather small (only 32×32 pixels after resizing) and somewhat lacking in variety, the model did show an impressive ability to generalize.

For instance, it could generate images of objects it hadn’t specifically seen during training, like a red school bus, and it handled unusual prompts, like “a stop sign is flying in blue skies,” with surprising aptitude. This suggested that the model wasn’t simply recalling images from its training data, but actually creating something new.

At that time, neural networks were only beginning to generate images, and the results were still rough and unrefined: a prompt like “a sunflower in a vase” might produce a pixelated blob with vague shapes. Around the same time, tools like Google’s DeepDream emerged, morphing photos into surreal, trippy art by amplifying the patterns a neural network had learned to detect. Apps like Prisma went viral by letting users reimagine selfies in the style of Van Gogh or Picasso, proving the public’s appetite for AI-driven visuals.

By 2018, GANs (Generative Adversarial Networks), first introduced in 2014, had marked a leap forward. Models like NVIDIA’s StyleGAN could generate hyperrealistic faces of people who didn’t exist. That same year, an AI-generated portrait titled Edmond de Belamy sold for $432,500 at Christie’s, sparking debates about creativity and ownership.

In 2021, OpenAI’s DALL-E redefined what seemed possible. For the first time, prompts like “an armchair shaped like an avocado” yielded quirky yet coherent images. Although its outputs were often glitchy, DALL-E demonstrated AI’s ability to grasp and combine abstract concepts.

By 2022, models like DALL-E 2, Midjourney, and Stable Diffusion had reached near-photorealistic quality. A prompt such as “cyberpunk Tokyo at night, neon lights reflecting on wet streets” could produce images that rival human concept art. Today, tools like Adobe Firefly and Runway ML push the boundaries further, enabling animations, 3D renders, and even video clips from text.

From alignDRAW’s tiny 32×32 images to today’s photorealistic worlds, text-to-image AI has evolved from a niche experiment into a cultural phenomenon. As debates over ethics and creativity continue, one thing is clear: machines have become fluent in the language of visuals.

Neural Networks in AI Image Generation

Turning a text prompt into an image with AI may feel like magic, but it’s actually a combination of mathematics and machine learning. Text-to-image models rely on multiple neural networks, each handling a specific task. Earlier systems were built on Generative Adversarial Networks (GANs); most current models combine Convolutional Neural Networks (CNNs), Variational Autoencoders (VAEs), and autoencoders (AEs) within a diffusion-based system to generate realistic visuals.

How AI Interprets and Generates Images

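To generate meaningful images, AI must first recognize objects and learn how words relate to visuals. On the visual side, this is where CNNs come in. Originally developed for tasks like handwriting recognition, CNNs scan an image in small overlapping patches, detect local patterns such as edges and textures, and combine them layer by layer into higher-level features like shapes and objects. In image generators, CNN-based networks also work in the opposite direction, turning such features back into pixels.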
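To make “detect local patterns” concrete, here is a minimal sketch of the core CNN operation in plain Python: a small filter slides across the image and computes a weighted sum of each patch. The 3×3 vertical-edge kernel and the toy image are illustrative assumptions; real CNNs learn thousands of such filters from data and stack them in many layers.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.
    Like most deep-learning libraries, this is technically cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the small patch currently under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Hand-picked vertical-edge detector (hypothetical); a trained CNN learns its weights.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 4x5 toy grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

print(conv2d(image, VERTICAL_EDGE))  # [[0.0, 3.0, 3.0], [0.0, 3.0, 3.0]]
```

The filter responds with 0 over the flat dark region and strongly wherever the patch straddles the dark-to-bright boundary, which is exactly the kind of “pattern” later layers build on.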

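Meanwhile, VAEs and AEs handle encoding and decoding image data. The encoder compresses an image into a compact, simplified representation, and the decoder reconstructs an image from it. A plain autoencoder always maps the same input to the same code, whereas a VAE samples its code from a learned probability distribution; that sampling introduces slight variations, which adds diversity and realism to AI-generated images.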
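The difference between a plain autoencoder and a VAE can be sketched with a deliberately tiny encoder and decoder. Both functions below are hypothetical stand-ins invented for illustration, not parts of any real model: the encoder squeezes four pixels into two numbers (brightness and contrast), and the VAE then uses the standard reparameterization trick, z = μ + σ·ε, to sample a code near that mean, so each decode comes out slightly different.

```python
import math
import random

def encode(pixels):
    """Hypothetical toy encoder: compress a row of pixels into a 2-number code
    (mean brightness, contrast) plus an assumed log-variance for each number."""
    brightness = sum(pixels) / len(pixels)
    contrast = max(pixels) - min(pixels)
    mu = [brightness, contrast]
    log_var = [math.log(0.01), math.log(0.01)]  # assumed variance 0.01 per dimension
    return mu, log_var

def decode(z, width=4):
    """Hypothetical toy decoder: rebuild a left-to-right gradient of `width` pixels."""
    brightness, contrast = z
    steps = [i / (width - 1) - 0.5 for i in range(width)]  # from -0.5 to +0.5
    return [brightness + contrast * s for s in steps]

def sample_latent(mu, log_var, rng):
    """VAE reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

rng = random.Random(0)
pixels = [0.1, 0.4, 0.6, 0.9]
mu, log_var = encode(pixels)

# A plain autoencoder decodes the same code every time...
print("AE :", [round(p, 3) for p in decode(mu)])
# ...while a VAE samples around it, producing slightly different outputs.
for _ in range(3):
    print("VAE:", [round(p, 3) for p in decode(sample_latent(mu, log_var, rng))])
```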

The Diffusion Process: Refining Images Step by Step

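A key technique in modern AI image generation is diffusion modeling. During training, the model sees real images that have been corrupted with gradually increasing amounts of random noise and learns to predict that noise. To generate a new image, the process runs in reverse: it starts from pure noise and, step by step, removes the noise the model predicts, guided by the text prompt, until a clear and coherent picture emerges. Many samplers also add back a smaller amount of fresh noise at each step, which helps keep the results varied.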
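The training half of this process, the forward noising step, has a convenient closed form in the widely used DDPM formulation: x_t = √ᾱ_t · x₀ + √(1 − ᾱ_t) · ε, where ε is Gaussian noise and ᾱ_t shrinks toward zero as t grows. The sketch below assumes DDPM’s linear schedule (1,000 steps, β rising from 0.0001 to 0.02) and a toy three-pixel “image”; the article doesn’t say which schedule any particular model uses.

```python
import math
import random

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, rising linearly (the DDPM defaults)."""
    return [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
            for t in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s = 0..t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal = math.sqrt(alpha_bar[t])
    noise = math.sqrt(1.0 - alpha_bar[t])
    x_t = [signal * x + noise * e for x, e in zip(x0, eps)]
    return x_t, eps  # during training, a network learns to predict eps from (x_t, t)

alpha_bar = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
image = [0.2, -0.5, 0.8]  # toy three-pixel "image"
for t in (0, 250, 500, 999):
    x_t, _ = add_noise(image, t, alpha_bar, rng)
    print(f"t={t:4d}  signal weight={math.sqrt(alpha_bar[t]):.4f}  "
          f"x_t={[round(v, 2) for v in x_t]}")
```

The printed signal weight starts just below 1 and falls close to 0 by the last step, so almost nothing of the original image survives; that fully noised state is where generation begins.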

The term comes from physics and mirrors heat conduction, where energy naturally spreads from warmer to cooler areas until an even distribution is reached. The forward diffusion process similarly spreads an image’s structure into noise until nothing recognizable is left. Generation runs this in reverse, iteratively smoothing out randomness until a structured, polished result emerges.
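Going the other way, generation can be sketched as DDPM-style ancestral sampling: start from pure noise and repeatedly subtract the predicted noise, adding back a little fresh noise at every step except the last. In a real model, a trained network conditioned on the text prompt predicts the noise. Here, as a purely hypothetical stand-in, the “dataset” is a single three-pixel image, so the ideal prediction can be computed exactly and the sampler should land back on that image. The schedule (1,000 steps, β from 0.0001 to 0.02) is an assumption borrowed from the original DDPM setup.

```python
import math
import random

# Assumed DDPM-style linear noise schedule.
T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = []
running = 1.0
for a in ALPHAS:
    running *= a
    ALPHA_BARS.append(running)

# Hypothetical "dataset": one three-pixel image. Only because the data is a
# single known point can the ideal noise prediction be written in closed form.
TARGET = [0.2, -0.5, 0.8]

def oracle_noise_predictor(x_t, t):
    """Stand-in for a trained, prompt-conditioned network: returns the exact
    noise separating x_t from TARGET at step t."""
    s = math.sqrt(ALPHA_BARS[t])
    n = math.sqrt(1.0 - ALPHA_BARS[t])
    return [(x - s * x0) / n for x, x0 in zip(x_t, TARGET)]

def sample(num_pixels, predict_noise, rng):
    """Start from pure Gaussian noise and denoise one step at a time."""
    x = [rng.gauss(0.0, 1.0) for _ in range(num_pixels)]
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        coef = BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t])
        # Subtract the predicted noise and rescale (mean of the previous step).
        x = [(xi - coef * ei) / math.sqrt(ALPHAS[t]) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a smaller amount of fresh noise (variance beta_t).
            sigma = math.sqrt(BETAS[t])
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x

result = sample(len(TARGET), oracle_noise_predictor, random.Random(42))
print([round(v, 4) for v in result])  # [0.2, -0.5, 0.8]
```

With a learned predictor instead of the oracle, the same loop produces new images that resemble the training data rather than reproducing one fixed target.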

Now that we’ve uncovered the fundamental building blocks of AI image generation, let’s explore how these systems work together to transform text into visual art.

How Do Text-to-Image Models Work?

Text-to-image AI models operate using intricate neural networks, each playing a role in transforming words into visuals. While different models may have unique designs, they all follow a similar foundational process. Let’s break down how they learn and generate images.

Training the AI: Connecting Words to Visuals

To generate realistic images from text, AI models first undergo extensive training on massive datasets containing images paired with descriptions. This process helps the system not only recognize objects but also understand how they relate to specific words and phrases. Over time, it learns the complex relationships between language and visual elements, forming the basis of text-to-image generation.
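The article doesn’t name a specific training objective. One widely used way to connect words and images is contrastive training, popularized by OpenAI’s CLIP: matching image and caption embeddings are pulled together, while mismatched pairs in the same batch are pushed apart. Below is a minimal sketch of that symmetric loss; the three-dimensional embeddings and the temperature of 0.07 are assumptions chosen for illustration.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_on_diagonal(logits):
    """Average cross-entropy where row i's correct answer is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max before exponentiating, for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive (InfoNCE-style) loss: image i should match caption i
    more strongly than any other caption in the batch, and vice versa."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
              for img in imgs]
    logits_text_to_image = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_on_diagonal(logits)
                  + cross_entropy_on_diagonal(logits_text_to_image))

# Hypothetical embeddings for three image/caption pairs.
images = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
captions = [[0.9, 0.2, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
shuffled = [captions[1], captions[2], captions[0]]  # deliberately mismatched

print(contrastive_loss(images, captions) < contrastive_loss(images, shuffled))  # True
```

Training lowers this loss over millions of real image/caption pairs, which is one way a model ends up placing “a red school bus” near pictures of red school buses.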

The Latent Space: AI’s Creative Playground

Rather than storing pictures the way a photo album does, AI converts visual and textual data into mathematical representations (vectors) and arranges them in a multi-dimensional space where related concepts sit close together. This space acts as a structured map in which colors, textures, shapes, and styles are positioned according to their relationships.
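“Related concepts sit close together” usually means their vectors point in similar directions, which is commonly measured with cosine similarity. The sketch below uses a handful of hand-made, hypothetical three-dimensional embeddings (real models learn vectors with hundreds or thousands of dimensions) and looks up a concept’s nearest neighbors.

```python
import math

# Hypothetical embeddings, hand-picked so related concepts point the same way.
EMBEDDINGS = {
    "cat":     [0.9, 0.8, 0.1],
    "kitten":  [0.85, 0.9, 0.15],
    "dog":     [0.8, 0.3, 0.2],
    "bicycle": [0.1, 0.2, 0.95],
    "car":     [0.2, 0.1, 0.9],
}

def cosine_similarity(a, b):
    """1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(word, k=2):
    """Return the k other concepts whose embeddings are most similar to `word`'s."""
    query = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    others.sort(key=lambda w: cosine_similarity(query, EMBEDDINGS[w]), reverse=True)
    return others[:k]

print(nearest("cat"))            # ['kitten', 'dog']
print(nearest("bicycle", k=1))   # ['car']
```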

When a user inputs a text prompt, the AI locates its mathematical counterpart in the latent space, pulling together relevant patterns from past training. It then reconstructs these numerical values into a visual format—creating a brand-new image that aligns with the description. Although this process may appear similar to human understanding, AI doesn’t ‘see’ images like people do; it identifies statistical patterns instead.

AI Learning vs. Human Learning

A useful analogy is how young children learn. Babies spend years observing their surroundings, listening to speech, and associating words with objects. As they gain experience, they refine their ability to recognize faces, animals, and everyday items.

Later, if you ask a child to draw something they’ve never seen before—like a purple cat on a bicycle—they can imagine it by combining familiar elements. AI models function similarly, blending learned patterns to create entirely new images based on descriptive input.

Although the mechanics are complex, the outcome feels almost magical: AI turns words into visuals, bringing ideas to life in ways that once seemed impossible.

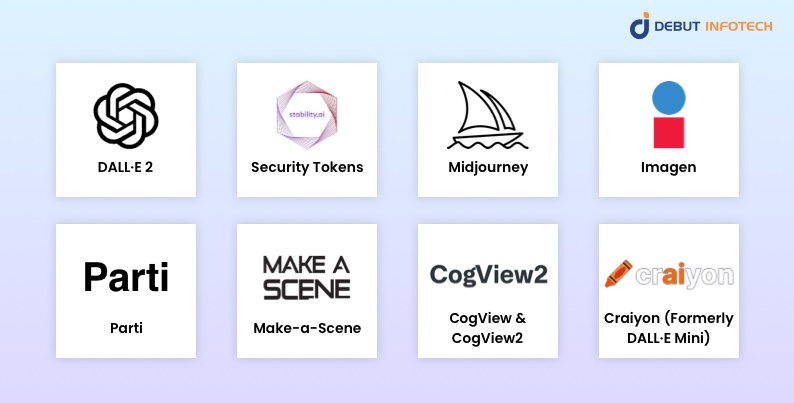

Popular Text-to-Image AI Models

AI-powered image generation is transforming digital creativity, with several models leading the way. Each model has its strengths and limitations, catering to different needs. Let’s explore some of the most widely used text-to-image AI systems.

1. DALL·E 2

Developed by OpenAI, DALL·E 2 is an advanced version of its predecessor, DALL·E (launched in 2021). It generates highly realistic images from text prompts, expands images beyond their original borders (outpainting), and removes or replaces objects (inpainting) while keeping lighting, shadows, and textures consistent.
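The article doesn’t detail how DALL·E 2 performs these edits, so treat the following as a generic sketch rather than its actual method. A common way to frame both operations is with a mask: outpainting enlarges the canvas and marks the new border as “to be generated”, and the final image keeps original pixels wherever the mask is 0. Only that bookkeeping is shown here; the flat grey `generated` array is a hypothetical stand-in for the model’s output, and real diffusion-based editors typically enforce the mask throughout denoising rather than once at the end.

```python
def pad_for_outpainting(image, pad, fill=0.0):
    """Enlarge a grayscale image (list of rows) by `pad` pixels on every side.
    Returns the bigger canvas and a mask where 1 marks pixels the model must invent."""
    h, w = len(image), len(image[0])
    new_h, new_w = h + 2 * pad, w + 2 * pad
    canvas = [[fill] * new_w for _ in range(new_h)]
    mask = [[1] * new_w for _ in range(new_h)]
    for i in range(h):
        for j in range(w):
            canvas[i + pad][j + pad] = image[i][j]
            mask[i + pad][j + pad] = 0  # original pixel: keep as-is
    return canvas, mask

def composite(original, generated, mask):
    """Keep original pixels where mask == 0; take generated pixels where mask == 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]

image = [[0.5, 0.6],
         [0.7, 0.8]]
canvas, mask = pad_for_outpainting(image, pad=1)
# Hypothetical model output: a flat grey guess for every pixel.
generated = [[0.4] * len(canvas[0]) for _ in canvas]
for row in composite(canvas, generated, mask):
    print(row)
```

Inpainting uses the same compositing step; the only difference is that the mask marks a region inside the existing image instead of a new border around it.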

Pros:

- High-quality, detailed images

- Ability to edit and expand existing images

- OpenAI’s terms assign users ownership of the images they create

- Integrated with Shutterstock for easier access

Cons:

- Not open-source; limited customization

- Requires an internet connection to use

- Some ethical restrictions on content generation

2. Stable Diffusion

Stable Diffusion, developed by Stability AI together with CompVis (LMU Munich) and Runway, is an open-source model known for its accessibility and flexibility. Unlike proprietary models, its weights are publicly released, so businesses can modify and fine-tune it themselves or hire generative AI developers to build tailored solutions on top of it. It has gained massive popularity, with millions of daily users.

Pros:

- Open-source and customizable

- Can run on personal devices (if hardware is powerful enough)

- High-quality outputs with fine-tuning options

- Active community of developers and contributors

Cons:

- More complex setup compared to web-based models

- Can be used for controversial or unauthorized purposes

- Legal concerns regarding training data and copyright
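How powerful is “powerful enough”? A rough lower bound is the memory needed just to hold the weights: parameter count × bytes per parameter. The sketch below uses the figures published in the original Stable Diffusion v1 repository (an 860M-parameter U-Net and a 123M-parameter text encoder); it leaves out the VAE and the extra working memory needed during generation, so real requirements are higher.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed just to store the weights, in GiB (activations not included)."""
    return num_params * bytes_per_param / 2**30

# Published Stable Diffusion v1 figures; the VAE is left out, so this is a lower bound.
UNET_PARAMS = 860_000_000
TEXT_ENCODER_PARAMS = 123_000_000
total_params = UNET_PARAMS + TEXT_ENCODER_PARAMS

for precision, nbytes in (("fp32", 4), ("fp16", 2)):
    print(f"{precision}: ~{weight_memory_gib(total_params, nbytes):.2f} GiB")
# fp32: ~3.66 GiB
# fp16: ~1.83 GiB
```

Halving the bytes per parameter (fp16 instead of fp32) halves the weight memory, which is one reason half-precision is a common choice when running such models on consumer hardware.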

3. Midjourney

Midjourney is an AI model operated by an independent research lab. It was first offered only through Discord, where users type prompts and receive AI-generated images, and it now also has its own web interface. Its artistic style tends to be more painterly and dreamlike, making it a favorite among digital artists.

Pros:

- Produces unique, high-quality, artistic images

- Accessible through Discord with an easy-to-use interface

- Regular updates and improvements by developers

- Great for concept art and storytelling visuals

Cons:

- No official transparency about its architecture

- Originally Discord-only, which limited accessibility

- Potential legal issues regarding copyrighted training data

4. Imagen

Developed by Google, Imagen was introduced as a competitor to DALL·E 2. It boasts exceptionally high-quality image generation, often outperforming other models in detail and realism. However, Google was initially hesitant to release it widely, citing concerns over potential misuse, and kept it in a controlled research environment; later versions have since become available through Google products such as Vertex AI and ImageFX.

Pros:

- One of the highest-quality AI models in image realism

- Developed with strong ethical considerations

- Uses advanced diffusion techniques for better texture and detail

Cons:

- Initial release limited to research access

- Strict content moderation policies

- Lack of customization or community-driven development

5. Parti

Another AI model by Google, Parti differs from diffusion-based models by using an autoregressive approach, treating image generation like a translation task. This technique breaks an image into tokens and reconstructs it similarly to how language models generate text.
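The token idea can be sketched in two parts: vector quantization, which maps each image patch’s features to the nearest entry in a codebook, and autoregressive decoding, which predicts the next token from the ones already produced. Parti’s real components (a ViT-VQGAN image tokenizer and a large Transformer) are replaced here by a made-up four-entry codebook and a hypothetical `toy_model`, and the decoding is greedy for simplicity; turning the tokens back into pixels, which the tokenizer’s decoder does, is not shown.

```python
# Hypothetical 4-entry codebook of 2-D patch features (real codebooks are far larger).
CODEBOOK = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

def quantize(patch_features):
    """Map each patch feature vector to the index of its nearest codebook entry."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(range(len(CODEBOOK)), key=lambda k: dist2(f, CODEBOOK[k]))
            for f in patch_features]

def generate_tokens(next_token_scores, prompt, length):
    """Greedy autoregressive decoding: score every possible next token given the
    prompt and the tokens so far, append the best one, and repeat."""
    tokens = []
    for _ in range(length):
        scores = next_token_scores(prompt, tokens)
        tokens.append(max(range(len(scores)), key=scores.__getitem__))
    return tokens

def toy_model(prompt, tokens):
    """Hypothetical stand-in for the Transformer: alternates tokens 1 and 2
    when the prompt mentions 'stripes', otherwise always prefers token 0."""
    if "stripes" in prompt:
        last = tokens[-1] if tokens else 2
        return [0.0, 1.0, 0.0, 0.0] if last == 2 else [0.0, 0.0, 1.0, 0.0]
    return [1.0, 0.0, 0.0, 0.0]

print(quantize([[0.9, 0.1], [0.2, 0.8], [0.1, 0.0]]))          # [1, 2, 0]
print(generate_tokens(toy_model, "red and white stripes", 6))  # [1, 2, 1, 2, 1, 2]
```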

Pros:

- Capable of generating highly detailed images

- Unique approach compared to diffusion-based models

- Designed for more structured and complex prompts

Cons:

- Not available to the public

- Still in research phase with limited testing

- No customization options for developers

6. Make-a-Scene

Developed by Meta, Make-a-Scene is a hybrid model that allows users to combine freehand sketches with text descriptions for better creative control. This approach is aimed at enhancing user input, making it a more interactive tool for artists.

Pros:

- Allows sketch-based guidance alongside text prompts

- More user control compared to traditional AI models

- Intended for creative professionals and artists

Cons:

- Not publicly available yet

- Limited details on future accessibility

- Lack of large-scale testing and real-world use cases

7. CogView & CogView2

Developed in China, CogView2 is an open-source AI model with 6 billion parameters that supports interactive, text-guided image editing. However, at the time of writing, its web demo was unavailable.

Pros:

- Supports interactive text-based image editing

- Open-source with a growing community

- Accepts Chinese-language prompts

Cons:

- Limited availability outside of China

- CogView (previous version) only supports Chinese prompts

- Not as widely adopted as Stable Diffusion or DALL·E

8. Craiyon (Formerly DALL·E Mini)

Originally known as DALL·E Mini, Craiyon was renamed to avoid confusion with OpenAI’s proprietary models. Created by Boris Dayma, Craiyon became an internet sensation due to its quirky, often humorous AI-generated images, making it a meme favorite on social media.

Pros:

- Easy to use and freely accessible

- Can generate a wide range of images quickly

- No sign-up required

Cons:

- Lower image quality compared to advanced models

- Limited artistic control and customization

- Can produce distorted or unrealistic results

How Debut Infotech Can Help with Text-to-Image AI Solutions

At Debut Infotech, we specialize in Generative AI Development services to help businesses integrate AI-powered creativity seamlessly. Here’s how we can support your business:

- Tailored AI Models

We build custom text-to-image models designed to reflect your unique brand, whether for marketing, product design, or social media content.

- Effortless Integration

Our team seamlessly integrates AI into your existing systems, enhancing your workflow with text-to-image models like DALL·E 2 or Stable Diffusion.

- High-Quality Content Creation

From concept art to promotional visuals, we deliver diverse, high-quality images that meet your creative needs.

- Ethical and Legal Compliance

We ensure your AI-generated images comply with legal standards, protecting your intellectual property and maintaining transparency.

- Ongoing Support

Our team offers expert consultation with leading Generative AI Consultants, troubleshooting, and continuous model optimization to ensure your AI stays ahead.

- Custom Training

Need more precision? We refine models to work with your unique data, creating even more accurate and context-specific images.

Debut Infotech empowers businesses to revolutionize content creation with cutting-edge text-to-image AI. Let us help you stay ahead and create stunning visuals that resonate with your audience. The future of content creation starts now – let’s bring your ideas to life!

Frequently Asked Questions (FAQs)

Q. Why might marketers use text-to-image models in the creative process?

A. Marketers use text-to-image models because they streamline content creation and produce unique, visually appealing results. These models let marketers create visuals rapidly, making the workflow smoother and more imaginative.

Q. How do text-to-image models work?

A. Many text-to-image models, including Stable Diffusion, are based on latent diffusion. They pair a language model, which turns your prompt into a hidden numerical representation, with an image generator that uses that representation to create a picture.
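Latent diffusion saves compute by running the denoising steps on the VAE’s compressed latent grid instead of on full-resolution pixels. Assuming Stable Diffusion v1-style settings (8× spatial downsampling and 4 latent channels), the arithmetic for a 512×512 RGB image can be sketched as follows; the function names are illustrative, not part of any library.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape of the latent grid for an image, assuming Stable Diffusion v1-style
    VAE settings (8x spatial downsampling, 4 latent channels)."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return height // downsample, width // downsample, latent_channels

def compression_ratio(height, width, image_channels=3, **vae_settings):
    """How many times fewer numbers the denoiser handles in latent space."""
    h, w, c = latent_shape(height, width, **vae_settings)
    return (height * width * image_channels) / (h * w * c)

print(latent_shape(512, 512))       # (64, 64, 4)
print(compression_ratio(512, 512))  # 48.0
```

Working on 16,384 latent values instead of 786,432 pixel values at every denoising step helps explain why latent diffusion models can run on consumer GPUs.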

Q. What kind of technology do text-to-image AI models use?

A. Text-to-image AI models typically use artificial neural networks, a machine learning method that processes text and generates images. These networks take words as input and produce an image, usually within a few seconds, so you can see your “creation” right away.

A. According to research, this number usually ranges from 200 to 600 images. However, it can vary based on the concept, the model itself, and the data used for training.

Q. What technology is used to analyze text in images?

A. Optical Character Recognition (OCR) is used to detect and extract text from images.
