A Comprehensive Guide to Generative AI Development

October 14, 2024

Generative AI is opening a new frontier in technology, pointing to a future where AI-generated content is commonplace. It is unlocking new possibilities and helping solve complex problems, from personalized media to automated data analysis. Its rapid growth is driven by strong investment and broad adoption across sectors.

The global generative AI market, worth USD 13.0 billion in 2023, is projected to grow at a 36.5% CAGR from 2024 to 2030. This rapid growth shows growing reliance on AI that generates new content and solutions, from digital images to business strategies. Factors like expanding applications in super-resolution, text-to-image, and text-to-video conversion, along with workflow modernization across industries, are driving generative AI demand in sectors like media, retail, manufacturing, IT, and telecom.

In this comprehensive guide, expect to uncover generative AI development from foundational models to real-world applications and deployment nuances. Whether you’re an industry professional, developer, or AI enthusiast, this journey will deepen your understanding and may inspire your next big project in generative AI.

Understanding Generative AI

Generative AI is a subset of AI technologies that generate new, distinct data instances—text, images, or sounds—similar to their training data. This technology uses machine learning to create content that resembles the original in form and feature but introduces new variations. This field is rapidly growing due to its potential to innovate and automate content creation across various domains.

Generative AI development often involves sophisticated modeling, and because architectures and training methods change quickly, it requires continuous innovation and adaptation.

Generative AI applications vary widely, from writing articles and composing music to creating virtual training environments for AI models. This broad utility makes generative AI tools crucial in both commercial and research settings, fostering a new era of AI-driven innovation.

Generative AI excels in learning from complex datasets to produce new, yet naturalistic outputs. This capability makes generative AI essential for creating new, unique content, positioning it as a cornerstone in advanced AI development.

Core Concepts in Generative AI Development

Generative AI has evolved from academic theory to a foundational technology in various fields. Its algorithms now underpin solutions in healthcare, entertainment, and automotive.

Developers refining generative AI models focus on improving accuracy, creativity, and real-world utility. The field keeps evolving, which calls for ongoing collaboration among developers, generative AI development companies, and stakeholders to enhance system utility.

The development phase is critical, as it involves rigorous testing and adaptation to ensure that the generative models are not only functional but also efficient and ethically sound.

Key Technologies and Algorithms of Generative AI

Foundational to generative AI development are technologies like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). These technologies represent the backbone of how generative AI operates and evolves. Exploring generative AI use cases reveals how these technologies are transforming sectors by offering solutions that were previously unattainable:

1. Generative Adversarial Networks (GANs)

GANs are crucial in generative AI, featuring two competing models, a generator and a discriminator. This setup refines outputs to closely mimic real data, making GANs essential for creating lifelike images or videos. Developing GANs requires significant expertise from companies specializing in tuning these networks to produce desired, sensible outputs.
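The competition between the two models can be made concrete by looking at the losses each one minimizes. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that a sample is real; the function names `bce` and `gan_losses` are illustrative, and the generator loss shown is the commonly used non-saturating variant rather than the only option.

```python
import math

def bce(prob: float, label: int) -> float:
    """Binary cross-entropy for one prediction, clipped for numerical safety."""
    eps = 1e-12
    p = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

def gan_losses(d_real: list[float], d_fake: list[float]) -> tuple[float, float]:
    """Return (discriminator_loss, generator_loss).

    d_real: discriminator probabilities that real samples are real.
    d_fake: discriminator probabilities that generated samples are real.
    """
    # The discriminator wants real samples scored 1 and fakes scored 0.
    d_loss = (sum(bce(p, 1) for p in d_real) / len(d_real)
              + sum(bce(p, 0) for p in d_fake) / len(d_fake))
    # Non-saturating generator loss: the generator wants fakes scored as real.
    g_loss = sum(bce(p, 1) for p in d_fake) / len(d_fake)
    return d_loss, g_loss

# A confident discriminator: its own loss is low, the generator's is high.
d_loss, g_loss = gan_losses(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
print(f"d_loss={d_loss:.3f} g_loss={g_loss:.3f}")
```

As the generator improves, the values in `d_fake` rise, which lowers the generator loss and raises the discriminator loss. That tug-of-war is what drives the refinement of outputs.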

2. Variational Autoencoders (VAEs)

VAEs are key for projects that require capturing and reproducing the essence of data, like in voice synthesis or stylized content creation. Unlike GANs, VAEs encode data into a latent space and decode it to generate new items, efficiently handling and transforming large datasets in generative AI models.
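To illustrate the encode-then-sample step, here is a minimal sketch of two pieces most VAE implementations share: the reparameterization trick for sampling from the latent space, and the KL penalty that keeps the latent distribution close to a standard normal. The encoder outputs (`mu`, `log_var`) are hypothetical values chosen for illustration; a real model would produce them with a neural network.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]   # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

A decoder would then map `z` back to the data space. Sampling different `z` values is what produces new items rather than copies of the input.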

Both technologies require advanced machine learning skills and deep knowledge of neural network architectures, making skilled generative AI developers crucial for successful projects. Collaborating with experienced generative AI companies provides the expertise and resources needed to effectively navigate these complex technologies.

Comparison of Generative AI and Traditional Machine Learning

While traditional machine learning models interpret and classify data, generative AI goes further: it learns the patterns in its training data and creates new data that mimics real-world data. Traditional machine learning uses defined targets and relies on historical data for predictions.

In contrast, generative AI creates new data instances, expanding machine capabilities in creativity and innovation.

Generative AI models require a different training approach from traditional machine learning. They need larger and more varied datasets to learn effectively and typically have more complex architectures. This complexity helps them grasp deeper data nuances but increases demands on computing power and training time. Comparing generative AI with traditional machine learning therefore shows a distinct approach, one that extends capabilities in creativity and innovation beyond traditional models.

In AI development, the shift from using AI for decision-making with existing data to enabling AI to create new content marks a significant evolution. This shift not only expands AI’s capabilities but also introduces new considerations in AI development and deployment.

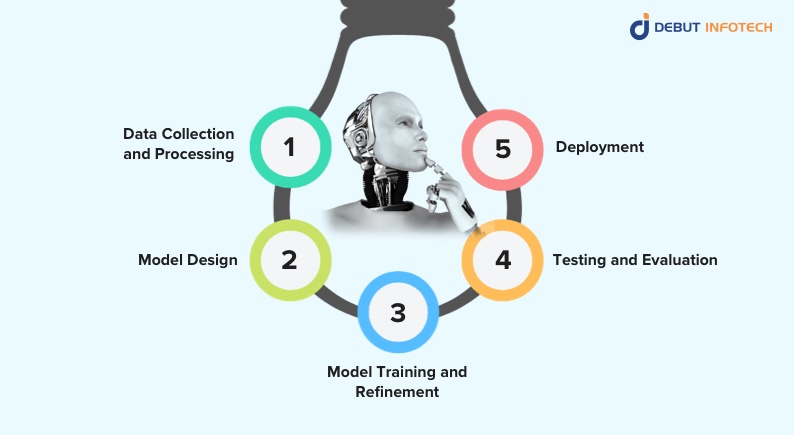

The Development Lifecycle of Generative AI Systems

The development of generative AI follows a structured life cycle with crucial stages. Each stage requires specific techniques and careful consideration to ensure the models’ effectiveness and reliability. Successful generative AI deployment integrates these models into existing systems for seamless adaptation and optimal performance.

1. Data Collection and Processing

The base of any generative AI model is robust, diverse data. Gathering a comprehensive dataset that reflects real-world diversity is essential. For example, NVIDIA’s research on generative models for synthetic images begins by collecting vast image data, which is then carefully annotated and processed to effectively train the models. This stage includes techniques like data augmentation and normalization to improve the diversity and quality of the training data.
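As a rough illustration of normalization and augmentation, the sketch below scales pixel values to the range 0 to 1 and adds a horizontally flipped copy of each image. It uses nested Python lists as a stand-in for image arrays; the helper names and the toy image are assumptions made for this example, and production pipelines would normally rely on an array or imaging library instead.

```python
def min_max_normalize(image):
    """Scale pixel values to the range [0, 1]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero on constant images
    return [[(p - lo) / span for p in row] for row in image]

def horizontal_flip(image):
    """Mirror each row, a simple label-preserving augmentation."""
    return [row[::-1] for row in image]

def augment(dataset):
    """Return the originals plus one flipped copy of each image."""
    return dataset + [horizontal_flip(img) for img in dataset]

raw = [[[0, 128, 255], [64, 64, 64]]]  # one toy 2x3 grayscale image
prepared = augment([min_max_normalize(img) for img in raw])
print(len(prepared), "images after augmentation")
```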

2. Model Design

This stage involves choosing the right architecture, like GANs or VAEs, based on project needs. The design is crucial as it determines the model’s efficiency and output quality. Designing a model includes configuring neural networks with the optimal number of layers and connections to balance performance and learning speed.

3. Model Training and Refinement

Training generative AI models is computationally demanding and involves meticulous tuning of parameters like learning rates and optimizer settings. For example, OpenAI’s development of DALL-E illustrates the iterative training of generative models on curated datasets, with subsequent testing and refinement rounds to reduce errors and improve generative capabilities.

4. Testing and Evaluation

After training, the model undergoes rigorous testing to ensure it generates new data that meets set quality and relevance standards. Metrics like FID (Fréchet Inception Distance) quantify how closely the statistics of generated images match those of real images, with lower scores indicating more realistic outputs.
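FID fits a Gaussian to feature vectors of real and generated images and measures the Fréchet distance between the two distributions. The sketch below is a simplified illustration, not the full metric: it assumes diagonal covariances, which avoids the matrix square root, and it takes plain feature lists where real FID uses activations from a pretrained Inception network. `frechet_distance_diag` is a hypothetical helper name.

```python
import math
from statistics import fmean, pvariance

def frechet_distance_diag(real_feats, gen_feats):
    """Fréchet distance between two Gaussians fitted per feature dimension.

    Simplifying assumption: covariance matrices are diagonal, so each
    dimension contributes (mean difference)^2 + (sigma_r - sigma_g)^2.
    """
    dist = 0.0
    for d in range(len(real_feats[0])):
        r = [f[d] for f in real_feats]
        g = [f[d] for f in gen_feats]
        mean_term = (fmean(r) - fmean(g)) ** 2
        var_r, var_g = pvariance(r), pvariance(g)
        trace_term = var_r + var_g - 2.0 * math.sqrt(var_r * var_g)
        dist += mean_term + trace_term
    return dist

real = [[0.0, 0.0], [2.0, 2.0]]   # toy 2-dimensional "features"
fake = [[1.0, 1.0], [3.0, 3.0]]   # same spread, shifted mean
print(frechet_distance_diag(real, real))
print(frechet_distance_diag(real, fake))
```

Identical feature sets give a distance of zero, and the score grows as the generated distribution drifts away from the real one.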

5. Deployment

This final stage places the trained model into a production environment to generate real-world value. It typically involves integrating with existing systems and developing user interfaces for interacting with the AI.

Techniques and Best Practices in Designing and Training Generative Models

When designing and training generative AI models, following best practices ensures system robustness and functionality:

1. Regularization Techniques

To avoid overfitting, using dropout, batch normalization, and data augmentation is crucial. These methods enhance the model’s ability to generalize, improving performance on new, unseen data.
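Of the techniques above, dropout is the easiest to show in a few lines. This is a minimal sketch of "inverted" dropout in plain Python, assuming activations are a flat list of floats; deep learning frameworks ship their own dropout layers, and the `dropout` function here is only an illustration of the idea.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during
    training and rescale survivors so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng, training=False))
```

Because units are dropped at random on every pass, the network cannot rely on any single unit, which tends to improve generalization. At inference time the function returns the activations unchanged.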

2. Hyperparameter Tuning

Systematically adjusting hyperparameters can greatly affect model performance. Employing automated tools for hyperparameter optimization can quickly and efficiently identify optimal settings.
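Random search is one of the simplest automated approaches. The sketch below samples a learning rate and a dropout rate at random and keeps the best combination. The `validation_loss` function is a hypothetical stand-in for "train a model and return its validation loss", and the search ranges are assumptions chosen for illustration.

```python
import math
import random

def validation_loss(learning_rate, dropout_rate):
    """Hypothetical objective with its minimum near lr=1e-3, dropout=0.3."""
    return (math.log10(learning_rate) + 3) ** 2 + (dropout_rate - 0.3) ** 2

def random_search(objective, n_trials, seed=0):
    """Return (best_loss, best_params) over n_trials random samples."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-5, -1),  # log-uniform sampling
            "dropout_rate": rng.uniform(0.0, 0.6),
        }
        loss = objective(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best

best_loss, best_params = random_search(validation_loss, n_trials=200)
print(best_loss, best_params)
```

Sampling the learning rate on a log scale is a common choice because useful values often span several orders of magnitude.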

3. Continuous Monitoring and Updating

Generative AI models may drift due to new data or environmental changes. Regular monitoring and retraining with updated data help maintain model accuracy and relevance.
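One simple way to watch for drift is to compare a quality metric on recent outputs against a reference window. The sketch below flags retraining when the recent mean moves more than a chosen number of standard deviations from the reference. The metric values and the threshold of 2.0 are assumptions for illustration; real systems often use more robust statistical tests.

```python
from statistics import fmean, pstdev

def drift_score(reference, recent):
    """Shift of the recent mean, in units of the reference standard deviation."""
    spread = pstdev(reference) or 1.0  # fall back to 1.0 for constant data
    return abs(fmean(recent) - fmean(reference)) / spread

def needs_retraining(reference, recent, threshold=2.0):
    return drift_score(reference, recent) > threshold

reference = [0.50, 0.52, 0.48, 0.51, 0.49]   # hypothetical weekly quality scores
recent = [0.70, 0.72, 0.69]
print(needs_retraining(reference, recent))
```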

Tools and Technologies in Generative AI Development

The development of generative AI models depends on advanced software, frameworks, and platforms that provide the infrastructure and capabilities needed to design, train, and deploy AI systems.

Here are some key tools widely used in the industry:

1. TensorFlow and Keras

TensorFlow, developed by Google, is a popular open-source library for numerical computation that simplifies and accelerates machine learning. TensorFlow, paired with its high-level API Keras, is widely used to build and train generative models due to its flexibility and robust scaling capabilities. Keras simplifies the creation of deep learning models with its user-friendly interfaces.

2. PyTorch

PyTorch, originally developed by Facebook AI Research (now part of Meta AI), offers ease and flexibility in complex model design. It is favored in academic research for generative AI, with a large community contributing to its development.

3. GANs & VAE Libraries

Libraries like `tensorflow-gan` for TensorFlow and `torchgan` for PyTorch offer tools and models for developing GANs and VAEs. These libraries provide modules that simplify implementing complex models, making it easier for developers to experiment and innovate.

How These Tools Are Applied in Different Stages of the Development Process

Here’s how the generative AI tools are applied, especially in different stages of development:

1. Data Preparation and Preprocessing

Pandas handles data manipulation, while NumPy handles numerical operations. Together, these tools clean, transform, and prepare data for training. The TensorFlow and PyTorch ecosystems also provide data augmentation utilities that increase training data diversity and model robustness.

2. Model Training

TensorFlow and PyTorch provide efficient training with automatic differentiation and GPU acceleration. Under the hood, NVIDIA’s CUDA platform and cuDNN library speed up training computations on GPUs.

3. Model Evaluation and Testing

MLflow and TensorBoard track, visualize, and compare model performance. These tools are crucial for evaluating how well the models generate new data and identifying areas for improvement.

4. Deployment

Docker and Kubernetes scale AI models across environments and platforms for effective deployment. TensorFlow Serving and TorchServe efficiently deploy deep learning models in production environments.

Generative AI Development Challenges

Generative AI development presents several key challenges affecting model performance and applicability. Here they are in no particular order:

1. Data Biases

Generative AI models may replicate or amplify biases from their training datasets. For example, an MIT study showed that facial analysis technology from major tech companies had higher error rates for women and people with darker skin, a gap linked to unrepresentative training data.

This negatively impacts the fairness, reliability, and acceptance of AI applications in diverse markets.

2. Model Instability

GANs are known for training instability. Without proper tuning, training can stall or oscillate as the generator and discriminator fail to improve together. A common failure is mode collapse, where the generator produces only a narrow range of outputs, resulting in limited or repetitive samples.
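A rough way to spot limited or repetitive outputs is to label a batch of generated samples and check how many categories actually appear. The sketch below assumes such labels are available, for example from a separate classifier; `mode_report` and the toy digit data are hypothetical and only illustrate the check.

```python
from collections import Counter

def mode_report(sample_labels, known_modes):
    """Summarize how evenly generated samples cover the known modes.

    sample_labels: one label per generated sample, e.g. from a classifier.
    known_modes: the categories present in the training data.
    """
    counts = Counter(sample_labels)
    covered = [m for m in known_modes if counts[m] > 0]
    top_share = max(counts.values()) / len(sample_labels)
    return {"coverage": len(covered) / len(known_modes), "top_share": top_share}

digits = list(range(10))
healthy = [i % 10 for i in range(1000)]      # every digit appears equally
collapsed = [1] * 950 + [7] * 50             # almost everything is a "1"
print(mode_report(healthy, digits))
print(mode_report(collapsed, digits))
```

Low coverage combined with a high share for a single category suggests the generator has settled on a handful of outputs.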

Strategies to Overcome These Challenges

Addressing generative AI development challenges requires strategies to create robust, fair, and stable systems:

1. Enhancing Dataset Diversity

To reduce data biases, use diverse datasets that reflect real-world demographics. This requires collecting varied data and using synthetic data generation to fill gaps in existing datasets.

2. Advanced Training Techniques

To address model instability, developers can use techniques like curriculum learning or ensemble learning. In curriculum learning, models are trained on progressively more challenging tasks. In ensemble learning, multiple models are trained and their outputs are aggregated to boost stability and performance.
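Both ideas reduce to small operations at their core. The sketch below orders training examples from easy to hard using a caller-supplied difficulty function (curriculum learning) and averages the outputs of several models position by position (ensemble learning). The difficulty measure and the example predictions are assumptions made for illustration.

```python
def curriculum_order(examples, difficulty):
    """Sort training examples from easiest to hardest."""
    return sorted(examples, key=difficulty)

def ensemble_average(predictions):
    """Average per-position outputs across several models."""
    return [sum(vals) / len(vals) for vals in zip(*predictions)]

# Curriculum: treat longer sentences as harder (a hypothetical difficulty proxy).
sentences = ["a b c d", "a", "a b"]
print(curriculum_order(sentences, difficulty=len))

# Ensemble: combine the outputs of two hypothetical models.
print(ensemble_average([[1.0, 2.0], [3.0, 4.0]]))
```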

3. Regular Auditing and Testing

Conduct audits and evaluations throughout development to catch biases early. IBM’s AI Fairness 360 is an open-source library for detecting and mitigating biases in machine learning models.

4. Adopting Ethical AI Frameworks

Develop guidelines that ensure fairness and inclusivity in AI deployment. For instance, Google’s AI Principles commit to avoiding and countering unfair bias, a standard many organizations adopt in AI development.

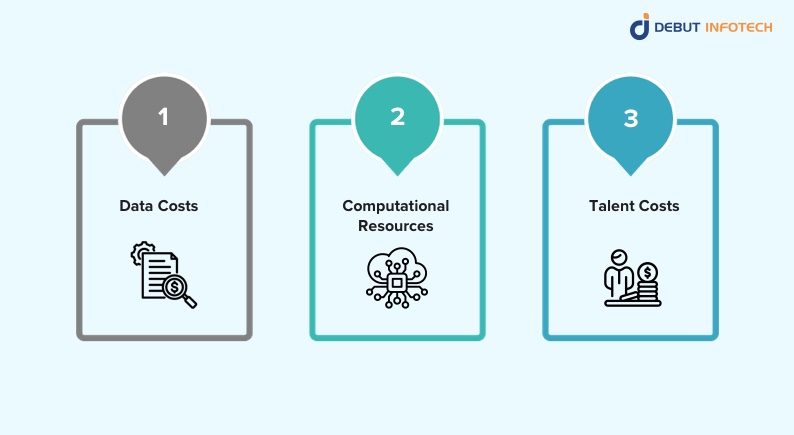

Cost Considerations in Generative AI Development

Developing generative AI systems requires substantial financial investment, influenced by the project’s scope and complexity. Costs are affected by data quality, required computational resources, and expertise needed for design, training, and deployment.

Below are the main cost drivers in developing generative AI systems:

1. Data Costs

Acquiring high-quality, diverse datasets can be expensive. For companies lacking internal data, buying data or investing in data generation and collection can be a major expense.

2. Computational Resources

Generative AI models, such as GANs, require significant computational power. Training these models often requires high-performance GPUs or cloud computing services, incurring significant costs. For instance, training OpenAI’s sophisticated GPT-3 model is estimated to have cost millions, mainly due to compute resources.

3. Talent Costs

Developing generative AI requires highly specialized expertise. To hire generative AI developers, companies must invest in experienced data scientists, AI engineers, and domain experts, which can be costly given the competitive tech industry market.

Staying up-to-date with the latest generative AI trends is also crucial for efficient budgeting and strategic planning. This ensures resources are allocated wisely and projects align with recent technological advances.

Budgeting, Resource Allocation, and Cost Optimization Strategies

Effective cost management is key for successful generative AI development projects. Consider these strategies:

1. Phased Implementation

Implementing the development in stages can help control costs better. This approach allows teams to evaluate the project’s feasibility and potential ROI at each stage before additional investments are made.

2. Use of Open-Source Tools

Using open-source libraries like TensorFlow and PyTorch can significantly cut software licensing and development costs.

3. Cloud Computing

Using cloud services can reduce the need for expensive hardware, saving costs. Understanding the cost of generative AI development is crucial for accurate budgeting and resource allocation.

Cloud providers offer scalable resources and tools for AI development, helping to reduce costs. Platforms like AWS, Google Cloud, and Microsoft Azure offer pricing models to fit different budgets and project needs.

4. Optimizing Model Efficiency

Enhancing AI model efficiency can reduce the computational resources needed. Techniques like model pruning, quantization, and transfer learning can cut training and inference costs while maintaining performance.
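Two of these techniques can be sketched on a plain list of weights. The example below shows symmetric 8-bit quantization, which stores weights as small integers plus one scale factor, and magnitude pruning, which zeroes the smallest weights. It is a simplified illustration with hypothetical helper names; frameworks provide their own quantization and pruning tooling that operates on full models.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from integers and the scale."""
    return [q * scale for q in quantized]

def prune_by_magnitude(weights, fraction):
    """Zero out the smallest-magnitude `fraction` of weights.

    Ties at the cutoff are pruned too, so slightly more may be removed.
    """
    k = int(len(weights) * fraction)
    cutoff = sorted(abs(w) for w in weights)[k - 1] if k else -1.0
    return [0.0 if abs(w) <= cutoff else w for w in weights]

weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
print(q, dequantize(q, scale))
print(prune_by_magnitude(weights, fraction=0.5))
```

Quantization trades a small reconstruction error (at most half the scale per weight) for a much smaller memory footprint, while pruning creates zeros that sparse storage or kernels can exploit.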

5. Partnerships and Collaborations

Partnering can distribute costs and risks in generative AI projects. Collaborations with academic institutions, industry consortia, or generative AI development companies can offer access to resources like talent and computational power at lower rates.

Budgeting and cost management are crucial for the success of generative AI projects. Strategic financial planning helps companies handle development complexities while maximizing the impact and reach of their AI solutions. Working with a firm that offers generative AI integration services can simplify this process.

Deployment and Integration of Generative AI Models

Deploying generative AI models requires careful planning to ensure scalability, reliability, and security. Here are some best practices:

1. Model Versioning and Management

Tracking model versions is crucial for maintaining deployment integrity. Tools like MLflow or ModelDB manage model versions and record training parameters and metrics, which are essential for compliance and auditing.
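To show what such a record might contain, here is a minimal in-memory sketch. It is not the MLflow or ModelDB API; `register_version`, the registry list, and the example parameters are all hypothetical. The idea is that each version stores the training parameters, a hash of them, and the resulting metrics, so any deployed model can be traced back to how it was trained.

```python
import hashlib
import json

def register_version(registry, name, params, metrics):
    """Append a version record; params_hash fingerprints the training setup."""
    payload = json.dumps(params, sort_keys=True).encode()
    record = {
        "name": name,
        "version": sum(1 for r in registry if r["name"] == name) + 1,
        "params_hash": hashlib.sha256(payload).hexdigest()[:12],
        "params": params,
        "metrics": metrics,
    }
    registry.append(record)
    return record

registry = []
v1 = register_version(registry, "image-gan", {"lr": 0.0002, "epochs": 50}, {"fid": 31.2})
v2 = register_version(registry, "image-gan", {"lr": 0.0001, "epochs": 80}, {"fid": 24.7})
print(v1["version"], v1["params_hash"])
print(v2["version"], v2["params_hash"])
```

Sorting the keys before hashing means two runs with the same parameters get the same fingerprint regardless of dictionary order.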

2. Continuous Integration and Deployment (CI/CD) for AI

Implementing CI/CD streamlines updates and deployments, ensuring reliable rollout of improvements and fixes. Automated pipelines reduce error risk and help maintain consistency throughout deployment stages.

3. Monitoring and Logging

It’s crucial to continuously monitor the model’s performance against real-world data once deployed. Tools like Prometheus (metrics collection and alerting) and Grafana (dashboards) can track model performance metrics once integrated with the serving stack. Logging anomalies and failures helps quickly identify issues that testing may not reveal.
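The pattern behind most monitoring setups is a rolling window plus an alert threshold. The sketch below uses only Python's standard `logging` module and a fixed-size window; the `RollingMonitor` class, the logger name, and the threshold values are assumptions for illustration, and in practice the recorded values would be exported to a metrics system rather than kept in memory.

```python
import logging
from collections import deque

logger = logging.getLogger("genai.monitor")  # hypothetical logger name

class RollingMonitor:
    """Track a metric over a sliding window and warn when its mean is too high."""

    def __init__(self, window, threshold):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def record(self, value):
        """Store a value; log a warning and return True if the rolling mean
        exceeds the threshold."""
        self.values.append(value)
        mean = sum(self.values) / len(self.values)
        breached = mean > self.threshold
        if breached:
            logger.warning("rolling mean %.3f exceeds threshold %.3f",
                           mean, self.threshold)
        return breached

monitor = RollingMonitor(window=3, threshold=1.0)  # e.g. latency in seconds
for latency in (0.5, 0.9, 2.0):
    print(latency, monitor.record(latency))
```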

4. Scalability Considerations

It’s essential that generative AI models handle varying loads effectively. Using scalable cloud services like Amazon S3 for storage, EC2 for compute, or Google Kubernetes Engine for application management helps efficiently handle load variations. This is another reason you should consider partnering with a leading generative AI development company with excellent track records in this field.

Conclusion

Generative AI development is transforming innovation across industries like healthcare, finance, and entertainment. By grasping the core technologies, development lifecycle, and best practices, organizations can unlock the transformative potential of these AI systems. As we continue to advance and refine these technologies, the potential for generative AI to drive significant progress and efficiency is immense and ever-expanding.

Frequently Asked Questions

Q. What programming language is used in generative AI?

Python is the most widely used language for generative AI, largely because of frameworks like TensorFlow and PyTorch. C++, Java, and Julia are also used in AI development.

Q. How do I become a generative AI developer?

You need to gain or have a strong foundation in Machine Learning, Mathematics, and Computer Science. You must also be proficient with PyTorch or TensorFlow, i.e. deep learning frameworks. Lastly, engage in projects involving generative models such as VAEs, GANs, or transformers.

Q. What is the difference between OpenAI and generative AI?

OpenAI is an AI research and deployment company that builds generative AI models and products, such as GPT-3, DALL-E, and ChatGPT. Generative AI is the broader category of technologies and techniques used to generate new content.

Q. Is chatbot a generative AI?

Not necessarily. Rule-based chatbots follow predefined scripts and are not generative. Chatbots built on generative AI models can summarize, predict, create, and translate content in response to a user’s query, without human intervention.

Q. Does ChatGPT use generative AI?

Yes. ChatGPT is a generative AI application that assists users with information retrieval and content creation.
