AI Legal Compliance 101: Essential Insights for AI Companies

June 6, 2025

Artificial intelligence has been listed as a top technology trend for several years, mainly because of its role in data-heavy industries such as retail, transportation, education and entertainment. Only in the past few years, however, has AI started attracting everyday users. With AI tools like image and text generators, individuals can now create content at the tap of a button.

It might seem like these tools generate content independently, but they do not. They are trained on huge collections of text and images, much of it taken from the internet. While this makes the tools convenient and simple to use, it also raises important legal problems: copying someone else’s content, misusing open-source material and infringing intellectual property rights are all becoming more frequent.

Because these risks are recognized, authorities in many countries are putting rules in place and creating new penalties for generative AI misuse. Companies that build AI systems should be mindful of these legal problems and strive to make their solutions ethical and within the law, prioritizing AI legal compliance.

This article will look into the key aspects of AI compliance in software development such as the legal risks, ways businesses can prepare for changes and the AI laws being introduced worldwide.

Without further ado, let’s dive in!

What Is AI Compliance?

Imagine that you are behind the wheel of a car on a highway. You need to watch your speed, use traffic signals and go through safety checks on your car for everyone’s safety. The reason for these rules is to safeguard everyone who is sharing the roads and not to limit your freedom. In the world of technology, AI legal compliance plays a similar role for artificial intelligence (AI) systems.

AI compliance is about making sure that AI systems are in accordance with laws, rules and ethical norms. These guidelines are set in place by governments and regulatory bodies so AI is built and applied safely, justly and with respect for human rights.

Why are Special Rules Necessary for AI?

- AI impacts important decisions: AI systems may make decisions concerning credit, jobs, healthcare and similar matters. For example, an AI tool could be used to determine whether someone is eligible for a loan. With compliance, such decisions are less likely to be unfair or biased.

- AI relies on personal data: Since AI frequently processes people’s private data, including health records and shopping history, compliance helps ensure privacy and data rights are protected.

- AI can lack clarity: It can be hard to work out why an AI algorithm reaches a particular conclusion. Compliance requirements push organizations to provide clear explanations of how decisions are made.

- AI can act unpredictably: Advanced AI may behave in unexpected ways. For example, without proper supervision, an AI chatbot could produce harmful or misleading information. Compliance makes it easier for organizations to detect and deal with such risks.

What Areas do AI Regulations Typically Address?

- Transparency: Revealing how the AI system operates and explaining the process behind each decision. For instance, an AI that assesses credit scores must clearly explain which elements influence its scoring.

- Accountability: Recognizing who is accountable for the actions or errors made by AI. If an autonomous vehicle is involved in an accident, it is important to know who is responsible.

- Ethical use: Ensuring AI is used in ways that respect social values and do not cause harm, for example by refusing to deploy AI in systems that infringe privacy rights.

- Privacy protection: Keeping personal information secure and honoring user consent, for instance by encrypting sensitive healthcare data used in AI systems.

- Safety: Making sure AI performs reliably and securely, such as testing a medical AI system thoroughly before use to avoid errors in diagnosis.

- Fairness: Reducing possible discrimination or bias in the outcomes of AI systems. For example, taking steps to avoid an AI discriminating against those of a particular ethnicity.
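As a rough illustration of how the fairness point can be checked in practice, the sketch below compares approval rates between groups, a measure often called the demographic parity gap. The function names and the sample data are assumptions made for this example, and a small gap on this one measure does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: (group label, was the application approved?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
print(selection_rates(sample))
print(parity_gap(sample))
```

A team could track this gap over time and investigate when it grows, rather than treating any single threshold as a legal standard.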

Ultimately, AI legal compliance is not meant to stop us from innovating; it’s there to ensure our work is guided in the right way. Just as traffic laws make roads safer while still letting people drive normally, AI compliance ensures we can use AI smoothly and safely.

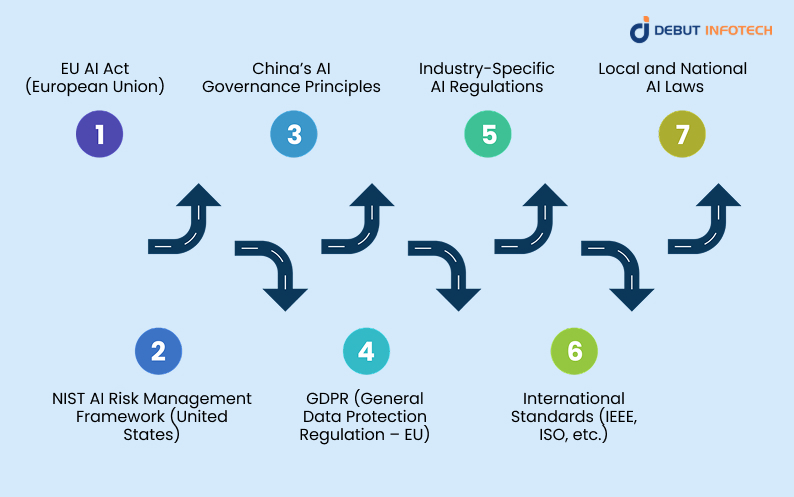

Major AI Regulations and Frameworks Around the World

Just like each country has different traffic rules, AI regulations differ around the world as well. As AI grows and improves, the rules for its responsible use also change. Here’s a quick overview of some of the most influential AI regulations and frameworks:

1. EU AI Act (European Union)

What it is: A regulation put in place by the European Union to manage how artificial intelligence is handled throughout its member states.

Recent Developments:

- Came into force on August 1, 2024.

- As of February 2, 2025, the prohibitions on unacceptable-risk practices (e.g., social scoring and certain forms of biometric surveillance) and the AI literacy provisions are in effect.

- From August 2, 2025, rules for general-purpose AI models and governance structures begin to apply.

- A Code of Practice for providers of general-purpose AI models is being finalized to help with compliance.

Key points:

- Bans AI applications that are deemed too risky.

- Imposes strict rules on “high-risk” AI systems (e.g., in hiring, law enforcement, or education), including requirements such as risk management, documentation and human oversight.

- Requires transparency and protects user rights.

2. NIST AI Risk Management Framework (United States)

What it is: A framework designed by the National Institute of Standards and Technology (NIST) that helps companies assess and manage AI-related risks.

Recent Developments:

- In July 2024, NIST released a Generative AI Profile, a guide for managing risks specific to generative AI technologies like ChatGPT, which are powered by large language models (LLMs).

- Executive Order 14179 (January 2025) aims to remove policies seen as barriers to AI development.

- Some states, such as Montana, have passed their own laws requiring transparency and human oversight in AI used by government agencies, which directly affects compliance for public-sector applications.

Key points:

- Encourages identifying, mitigating, and monitoring AI risks.

- Promotes ongoing system improvement.

3. China’s AI Governance Principles

What it is: A set of ethical rules made by the Chinese government that directs how AI technologies should be developed and used.

Key points:

- Advocates for harmony between humans and AI.

- Emphasizes fairness, transparency, and accountability.

- No major updates have been announced recently, but the principles remain a core influence in China’s AI policy.

4. GDPR (General Data Protection Regulation – EU)

What it is: A foundational privacy law in the EU that has a major impact on AI systems working with personal data, shaping AI legal compliance globally.

Recent Developments:

- GDPR works alongside the AI Act to enhance protections around automated decision-making.

- The “right to explanation” is being reinforced under the new AI regulations.

Key points:

- Gives users control over their personal data.

- Requires a lawful basis, such as informed consent, for collecting and using personal data.

- Guarantees transparency in automated decisions.

- Compliance is essential for AI development services operating in or targeting the EU market.

5. Industry-Specific AI Regulations

In some industries, sector-specific laws and guidelines, such as HIPAA in US healthcare and Basel III in banking, also shape the way AI is used.

Recent Developments:

- The Big Four accounting firms are creating AI assurance services to verify the safety, fairness, and transparency of AI systems, especially in healthcare and finance.

- No major legislative changes to HIPAA or Basel III yet, but scrutiny over AI use in these sectors is increasing.

6. International Standards (IEEE, ISO, etc.)

What they are: Standards created by international organizations. They are mostly non-binding, but they are widely respected as a way to ensure AI systems are safe and ethical.

Recent Developments:

- In September 2024, the Council of Europe’s Framework Convention on AI opened for signature. Early signatories, including the EU, the United Kingdom and the United States, committed to align AI use with human rights, democracy, and the rule of law.

Key points:

- Promote transparency, safety, and accountability.

- Help organizations align AI development with ethical and legal standards.

7. Local and National AI Laws

Along with international and federal efforts, local communities are making their own laws to manage regional issues.

Recent Developments:

- In the U.S., over 260 state lawmakers have opposed a federal proposal for a 10-year moratorium on state-level AI laws, arguing for the need to tailor laws to local needs.

- India’s Odisha state passed its AI Policy 2025 in May, focusing on responsible AI use, infrastructure, and skill-building. Implementing such policies effectively often requires partnering with specialized AI consulting firms.

Key points:

- Local laws often target specific issues like facial recognition or AI in policing, introducing unique AI legal compliance requirements.

- Push for ethical AI aligned with cultural and societal values.

How to Build an AI Model That Meets Compliance Standards

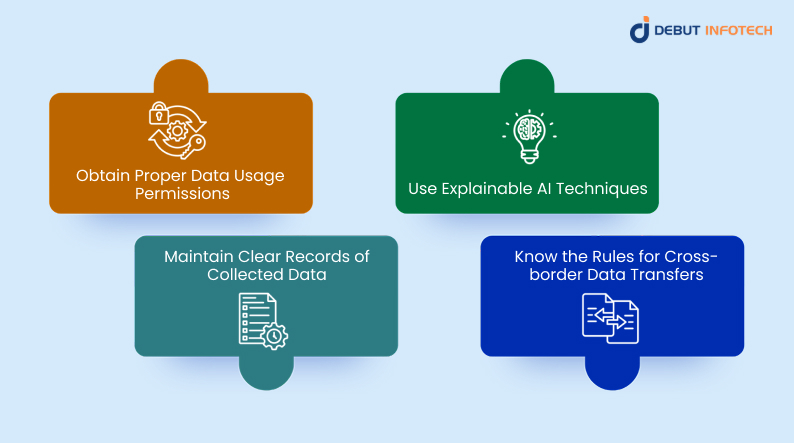

As regulations for AI are constantly changing worldwide, businesses should make AI legal compliance a top priority when building AI tools. Here are the main steps that help a project meet regulatory expectations, whether you build in-house or use AI development services:

Obtain Proper Data Usage Permissions

Laws and regulations require that user privacy be at the heart of AI system design. In practice, this means gathering only essential data, explaining the purpose of the data collection and stating how long the data will be kept. Above all, obtain consent from users before gathering any data.
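The points above can be sketched in a few lines of code. The example below is a minimal illustration, not a reference implementation: the `ConsentRecord` class, the `ALLOWED_FIELDS` allow-list and the `collect` function are all hypothetical names invented here, and real consent management involves far more (withdrawal, audit trails, legal review).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical allow-list: the only fields this illustrative purpose needs.
ALLOWED_FIELDS = {"user_id", "age_band", "purchase_category"}


@dataclass
class ConsentRecord:
    user_id: str
    purpose: str            # why the data is collected
    granted_at: datetime    # when the user consented
    retention: timedelta    # how long the data may be kept

    def expires_at(self) -> datetime:
        return self.granted_at + self.retention


def collect(raw: dict, consent: Optional[ConsentRecord], now: datetime) -> dict:
    """Keep only allow-listed fields; refuse if consent is missing or expired."""
    if consent is None:
        raise PermissionError("no consent on record")
    if now >= consent.expires_at():
        raise PermissionError("retention period has ended")
    return {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}


now = datetime(2025, 6, 6, tzinfo=timezone.utc)
consent = ConsentRecord("u1", "recommendations", now - timedelta(days=10), timedelta(days=30))
print(collect({"user_id": "u1", "email": "a@example.com", "age_band": "25-34"}, consent, now))
```

Here the email address is dropped because it is not on the allow-list, which is one simple way to enforce "gather only essential data" in code.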

Use Explainable AI Techniques

Explainable AI addresses the “black box” issue, common in complex machine learning (ML) models, by letting users understand how the model arrives at its decisions. This clarity also shows developers which inputs actually influence the output, so they can stop collecting data the model does not need and meet data minimization requirements.
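One common explainability technique is permutation importance: shuffle one input across records and see how much the model's accuracy drops. The sketch below applies it to a toy, hypothetical scoring rule (the `model` function, the feature names and the random data are assumptions for illustration only). An input whose importance stays at zero is a candidate for removal from data collection.

```python
import random


def model(row):
    # Hypothetical scorer: uses income and debt, ignores postcode entirely.
    return 1 if row["income"] - 2 * row["debt"] > 0 else 0


def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature, seed=0):
    """Drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    shuffled = [r[feature] for r in rows]
    rng.shuffle(shuffled)
    permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
    return accuracy(rows, labels) - accuracy(permuted, labels)


# Synthetic data; labels come from the model itself, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [
    {
        "income": data_rng.uniform(0, 100),
        "debt": data_rng.uniform(0, 50),
        "postcode": data_rng.randint(1000, 9999),
    }
    for _ in range(200)
]
labels = [model(r) for r in rows]

importances = {f: permutation_importance(rows, labels, f) for f in ("income", "debt", "postcode")}
print(importances)
```

Because the toy model never reads `postcode`, shuffling it cannot change any prediction, which is the signal that the field could be dropped.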

Maintain Clear Records of Collected Data

It is important for businesses to understand exactly where personally identifiable information (PII) is stored and how it is being handled. To maintain privacy, data should be organized properly and labeled appropriately. Organizations should also identify which datasets contain specific personal information so they can secure them effectively.
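A simple starting point for such records is an automated scan that labels columns likely to hold PII. The sketch below is only an illustration under stated assumptions: the two regular expressions are deliberately crude, and the `label_columns` and `pii_inventory` helpers are hypothetical names, not part of any library.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "phone": re.compile(r"\+?\d[\d\s\-]{7,}\d"),
}


def label_columns(sample_rows):
    """Map each column name to the set of PII labels found in its sample values."""
    labels = {}
    for row in sample_rows:
        for column, value in row.items():
            found = labels.setdefault(column, set())
            for name, pattern in PII_PATTERNS.items():
                if pattern.fullmatch(str(value)):
                    found.add(name)
    return labels


def pii_inventory(datasets):
    """Return {dataset: {column: labels}}, keeping only columns that hold PII."""
    inventory = {}
    for name, rows in datasets.items():
        flagged = {col: tags for col, tags in label_columns(rows).items() if tags}
        if flagged:
            inventory[name] = flagged
    return inventory


# Hypothetical datasets, each represented by a few sample rows.
datasets = {
    "crm": [{"name": "Ann", "contact": "ann@example.com"}],
    "metrics": [{"clicks": 12}],
}
print(pii_inventory(datasets))
```

The resulting inventory tells a security team which datasets need stricter access controls and which can be handled as ordinary operational data.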

Know the Rules for Cross-border Data Transfers

If your artificial intelligence technology involves sharing data internationally, be sure to follow the legal rules in every country the data goes to. Under the GDPR, for example, transferring personal data to a non-EEA country that lacks an adequacy decision generally calls for an appropriate safeguard and a Transfer Impact Assessment to ensure the data is handled correctly.
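A data pipeline can encode this kind of rule as a pre-transfer check. The sketch below is a simplified illustration that assumes transfers inside the EEA, or to a country covered by an EU adequacy decision, need no separate assessment. The country sets are deliberately incomplete examples, and `transfer_requirements` is a hypothetical helper; a real system would load current lists from an authoritative source and involve legal review.

```python
# Illustrative, deliberately incomplete sets of ISO country codes.
EEA_EXAMPLES = {"DE", "FR", "NO"}
ADEQUACY_EXAMPLES = {"JP", "CH"}


def transfer_requirements(destination):
    """List the steps to complete before sending personal data to `destination`."""
    code = destination.upper()
    if code in EEA_EXAMPLES:
        return []  # stays within the EEA
    if code in ADEQUACY_EXAMPLES:
        return ["record the adequacy decision as the transfer basis"]
    return [
        "put an appropriate safeguard in place (e.g. standard contractual clauses)",
        "complete a Transfer Impact Assessment",
    ]


for country in ("DE", "JP", "BR"):
    print(country, transfer_requirements(country))
```

Returning a list of outstanding steps, rather than a yes/no answer, lets the pipeline block the transfer until each step has been signed off.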

These best practices can help reduce the risks of building and using AI. Nevertheless, it’s important to understand that eliminating risk completely is not always achievable, because regulatory rules change depending on the situation. In such cases, AI risk managers assess the situation and decide whether intervention is needed.

Final Thoughts

AI legal compliance requires an adaptable approach that keeps pace with the constant progress of technology. Rules that govern AI must be dynamic and centered on ethics, transparency, and accountability to address biases and ensure fair operations.

For this to occur, countries need to cooperate closely. A standard global approach to AI compliance can fill gaps in regulation and encourage innovation worldwide. When countries cooperate, they can establish common rules that guard the public’s interests without slowing down technology.

To achieve this, policymakers must work closely with a mix of experts and AI development companies. Together, their perspectives will play a major role in solving issues related to data privacy, cybersecurity and liability. With all sides collaborating, AI risks will be lower and opportunities will be shared more equally.

Going forward, balancing innovation with safeguards will help ensure that AI development is ethical, sustainable and meets the needs of all.

Frequently Asked Questions (FAQs)

A. AI legal compliance means putting policies and practices in place that keep a business within the laws and regulations governing AI systems. It also includes setting internal rules that encourage ethical AI development.

A. While AI transforms how businesses operate, compliance professionals must protect data privacy, address algorithmic bias and control risks linked to third-party firms (especially during AI chatbot development), while also ensuring AI is used in an ethical and transparent way.

A. Conformance is a choice, but compliance is required by law or regulation. Regulators enforce compliance and look for cases of non-compliance, whereas conformance with a voluntary standard is typically checked by an auditor who reviews the client’s records against that standard.
